What will the Covid-19 pandemic look like from the future? Perhaps the exceptional imagery will be most lasting: personless cityscapes, protesters in masks (and anti-mask protesters), the empty shelves where toilet paper used to be, death-count infographics, and denialist comment threads. But for the middle class and its remnants, those who worked from home, this period will be remembered with a different array of images. Theirs was a narrower horizon: a semipermanent Zoom meeting with colleagues in AirPods and athleisure, interrupted by the occasional surprise of a pet or a child. Exile from the office has been cast as one of this plague’s few consolations and, we are told, a transformation that is already all but permanent.

By April 2020, 62 percent of American workers had switched to home-based work; many of the rest had occupations that were too manual or too essential to perform remotely. Some companies, like Twitter, sent all of their staff out of the office indefinitely, announcing a “forever” policy that would extend beyond the contagion period. This grand experiment in teleworking has been examined in a rash of think pieces; they tend to strike an optimistic note. “Major corporations will readily adopt Twitter’s model,” said Forbes, citing worker productivity, real estate savings, environmental concerns, work-life balance, and reductions in commuting time.

If these arguments in favor sound familiar, it is because some are already generations old. For decades, advocates of telework have faced a paradox. All the upsides Forbes listed are genuine: They are benefits so intuitive as to be almost self-evident. Yet widespread adoption of remote work has remained, until now, an impressively stubborn failure. Ambitious forecasts of its uptake, made by business analysts and futurists like Alvin Toffler, who wrote The Third Wave, a 1980 book about society’s transition into the “information age,” were thwarted. Toffler correctly predicted the widespread advent of home computer terminals, but not how they would be used. He imagined the home PC as a sort of digital hearth in an “electronic cottage,” a socialist-entrepreneurial hybrid zone where familial teams would achieve “increased ownership of the ‘means of production’ by the worker.” “If as few as 10 to 20 percent of the work force as presently defined were to make this historic transfer over the next 20 to 30 years,” he wrote, “our entire economy, our cities, our ecology, our family structure, our values, and even our politics would be altered almost beyond our recognition.” Instead, the academic Patricia L. Mokhtarian found, the share of employees working from home rose from around 2.3 percent in 1980 to just 5.3 percent in 2018.

Moreover, some high-profile adopters of remote work even recanted. In the mid-2010s, companies like IBM and Yahoo began calling their employees back into the office. IBM, formerly one of the most telework-friendly companies in the world (in 2009, some 40 percent of its 386,000-strong workforce were employed off-site), spent $380 million on offices with movable desks and walls you could write on. In 2013, the CEO of Yahoo, Marissa Mayer, announced a ban on employees working remotely, arguing that when people come together, they’re “more collaborative and innovative.” Though later she worried this ban would become her defining legacy, she felt vindicated by the increased productivity at her company. Other companies, notably Best Buy, followed Yahoo’s lead.

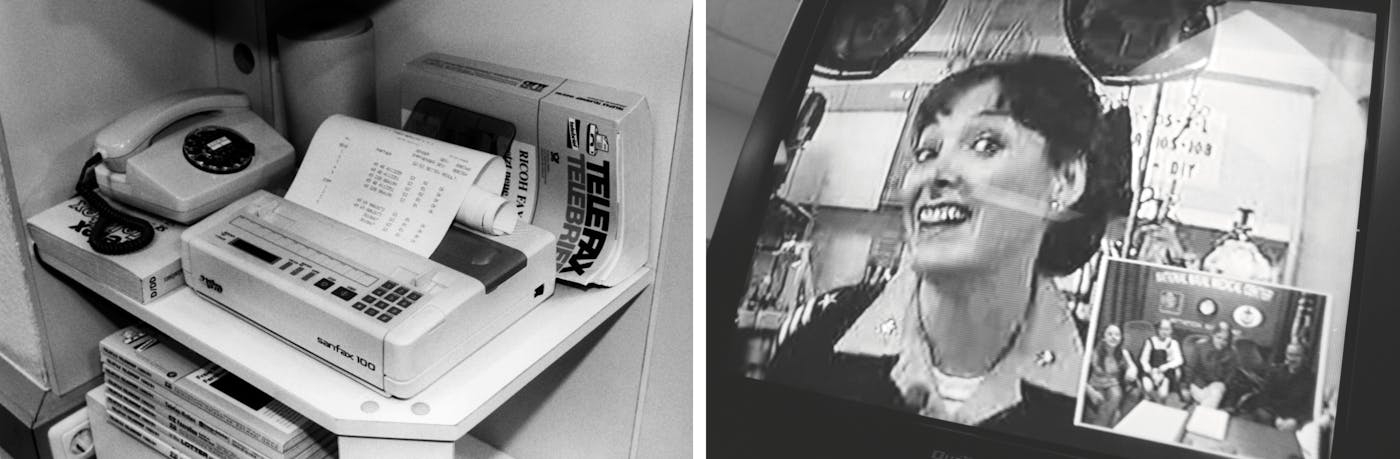

The pattern of modern-day work Yahoo returned to, in which employees commute to an office, has proved resistant to technological and social change. It outlasted the invention of the fax machine, the internet, and videophones; the decline of former industrial regions; stratospheric real estate prices in coastal megacities; globalization; climate change; air pollution; record commuter congestion; and failing subway systems. Each of these transformations could have nudged the business world toward remote work; none did. “The impending ubiquity of working from home,” as Mokhtarian put it, very nearly remained impending forever. Mokhtarian has collected 40 years’ worth of media reports heralding a shift that never came, and they feel like rehearsals of the present moment. Any major disruptive event, from hurricanes to the Olympic Games, returned telework to the news. “Telecommuting Takes Off After Quake … Most employees welcome the change,” the Los Angeles Times reported after the city’s 1994 earthquake, one of many catastrophes supposed to liberate commuters for good.

Visions of this telecommuting future were brightest in California. It was (and still is) a state where technological innovations jostle against a grinding, car-based commuter culture at odds with the regional predilection for personal freedom and an easy lifestyle. Engineers would spend their mornings staring at a bumper, and arrive at the same thought by the afternoon: There has to be a better way. One of them, Jack Nilles, provides the origin story for the term “telework.” In the 1970s, Nilles, who worked at NASA, moved to Los Angeles and found himself exasperated by the traffic. Not only was it a waste of time, it was a waste of energy, and the oil crisis had made energy conservation, particularly of gasoline, a national priority. Nilles and his team came up with a series of proposals to abolish or curtail commuting. Instead of workers traveling from their homes to centralized business districts, they would drive, walk, or cycle a shorter distance to purpose-built extra-metropolitan offices. These would be wired and linked as telecommunications hubs.

Most thumbnail histories are simplifications, but this one is unusually misleading. Nilles himself has added many qualifications to his “father of telework” mantle, pointing out that in his prototypes, employees still physically commuted to a work location—the buildings were merely suburban rather than metropolitan. Less remarked on is that teleworking in this form already existed, both as a theory (versions had been proposed in science fiction as early as 1909) and as a practice. Nilles’s own employer, NASA, had done something similar in the 1960s.

These innovations were prefigured by an even older thread of beliefs and practices that ran right through the twentieth century. They urged work to return to its rightful place in the home, through cultural modifications and the assistance of technology. In 1964, the science-fiction writer Arthur C. Clarke (himself an early advocate for telecommunications satellites) minted the motto “Don’t commute—communicate!” With widespread acceptance of this maxim, Clarke said, civilization could expect the “disintegration of the city” by the year 2000. Almost any executive skill, any administrative skill, even any physical skill, he predicted, could be rendered independent of distance.

The prospect was not merely speculative. Throughout the Apollo program, NASA officials had established a set of telecommunications networks that linked their own meeting rooms with the offices of key contractors, pairing fast and slow facsimiles with voice conferencing. The result was an early and effective form of telemeeting technology. Other organizations were more advanced still: In the United Kingdom, the Post Office built a sophisticated interoffice teleconferencing system that was finished in the late 1960s. Called “Confravision,” it could relay sound, images, and diagrams drawn on a blackboard, but was hampered by cumbersome technology and expensive transmission. In inflation-adjusted terms, a multiexecutive meeting cost more than $4,500 an hour to broadcast two ways. The cost was lower than that of business travel (or building new offices), but, not surprisingly, the “demand” its designers banked on never materialized.

By 1972, the year the word “telework” was coined, researchers at University College London had already completed a four-year study on the psychology of telecommunications. They compared different forms of technologically mediated meetings: some audio-only, others combining sound and image. Their findings, published in New Scientist, held that audio teleconferencing was suitable only for “exchange, brainstorming, cooperative problem-solving, or routine decision-making.” Bargaining or “getting to know people” required video, which was still not as good as face-to-face meetings. While the energy crisis was the most urgent factor driving their work, they recognized more subtle variables. The “sociological consequences of limiting some kinds of contact,” for example, might hamper the uptake of remote meeting technology.

In the decades to come, these sociological consequences proved deeper and more intractable than anyone had imagined. Some obstacles were foreseeable. The researchers predicted the “generation effect,” where easier, zero-cost communication would increase internal messaging to unsustainable levels. This problem struck company email systems almost as soon as they were rolled out. When IBM switched to intra-office email in the early 1980s, the $10 million mainframe powering it was overwhelmed in a week. As the technology writer Cal Newport has detailed, IBM workers “began to communicate vastly more than they ever had before.” Engineers had estimated the required computing power from the number of paper memos exchanged, and that baseline proved hopelessly low. Any productivity gains were drowned in a flurry of ephemera.

By the late 1990s, when it was already clear that optimistic forecasts of telework adoption would not be met, the California Polytechnic State University academic Ralph D. Westfall compiled a list of the reasons. Some were clear: The productivity gains touted were dubious, and teleworkers were drawn from a pool of the self-motivated anyway. Others were more obscure. The internet, which offered remote access to documents, files, and images, seemed an ideal aid for telecommuting, and its arrival should have impelled a decisive shift, but Westfall detected some unseen countervailing force. The persistently low number of true telecommuters suggested that “other, less obvious factors” were keeping people in offices.

Westfall named one of these factors “illegitimacy.” Working remotely could help employees resist the totalizing nature of work, but their absence also meant they could be seen as “not really working.” Absent employees risked being judged less dedicated, or suspected of trying to shirk their duties. Curiously, Westfall found, telework was seen as “real” only when it was associated with health issues. “Telecommuting becomes more legitimate,” he explained, “if there is an ‘excuse’ for it, a connection with something that is recognized as an acceptable reason for being away from the office.” It took a drastic, worldwide “excuse,” rooted in precisely the issue Westfall identified, the health of the global populace, to finally shift corporate culture decisively.

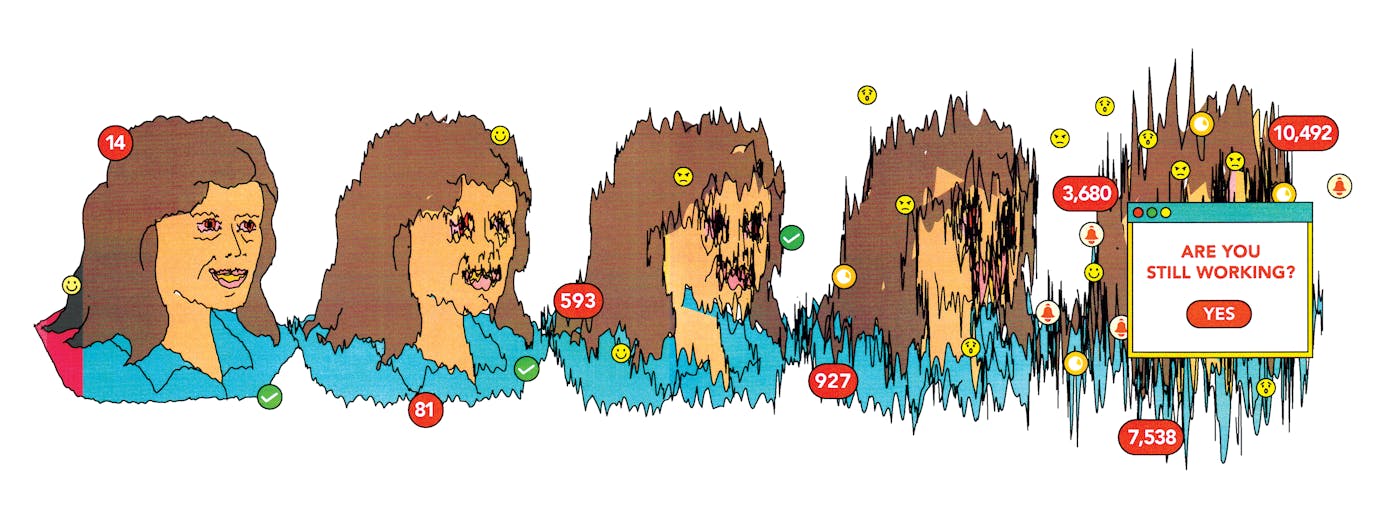

Once realized, widespread telework turned out to be a mixed blessing. After weeks of Covid confinement, workers on social media joked that they were “living at work rather than working from home.” Underneath the irony was a trenchant observation. Nilles and those before him expected more than mere energy saving and worker productivity. They wanted to return labor, in time and space, to the sphere of a worker’s own influence. These pioneers did not imagine that so much saved time would only be expended on more work (much of it uncompensated), and they were not alone in this error. Many of the twentieth century’s eminent thinkers on economics, John Maynard Keynes among them, assumed that increased labor productivity would result in more personal time. Instead, remote work became another front in one of the defining battles of twentieth-century labor economics: the defeat of leisure.

The dream of a tech-enabled leisure boom also foundered on the exact nature of the proposition. In academic study, thanks to definitional issues, accurate estimations of telework are notoriously difficult to arrive at. Telework, telecommuting, mobile workers, home workers, after-hours telecommuting, and telecommuting centers are all distinct but frequently overlapping phenomena. But “doing work” and “going to work” are also very different things, and in the West it is the latter that has defined modern life and modern lives, along with the labor conditions, cities, relationships, and patterns of movement that make them up.

Perhaps the most energetic set of ideas underpinning remote work came from architecture, especially anti-metropolitan architecture. While Frank Lloyd Wright hated cities intermittently, his loathing of commuting was constant. Wright’s designs for Broadacre, his utopian anti-metropolis, included factories but no office skyscrapers. White-collar workers were expected to work from home, and, in both his writing and in his own working life, Wright encouraged this practice. His Oak Park, Illinois, home had a private office, library, and reception hall, and he later added a studio, which at its peak had 14 “associates,” on the understanding that if others could not work at home, they could at least work at his home.

In The Disappearing City, Wright’s 1932 treatise on his ideal metropolis, the architect tried to immolate the concept of the industrial city on a pyre of adjectives. The modern city was a centripetal force spinning beyond control, operating at a scale and pace that could only be inhuman. The commuter, who spent his time in a senseless, ceaseless to-and-fro between office and home, was a victim of the age. Barely living, he had instead developed an “animal fear of being turned out of the hole into which he has been accustomed to crawl only to crawl out again tomorrow morning.” Only in Broadacre, or somewhere like it, could this time and energy be recovered, and expended on the “new individual centralization—the only one that is a real necessity, or a great luxury or a great human asset—his diversified modern Home.” The offices of the “professional” would be merely an add-on, an optional feature.

Wright’s reasoning was partly aesthetic—he linked the confounding disorder of irregular city planning to an ugliness of spirit (in figures like H.P. Lovecraft, this distaste was also highly racialized). The rationale was also partly political. Offices and factories, where necessary, would be linked by “volatilized, instantaneous intercommunication,” but the home would be a sanctuary for Broadacre Man, Wright wrote, “less of his energy consumed in the vain scramble in and scramble out.” This mode of labor was truest to a democratic society, and while Wright’s vision was materially futuristic, it was also a return to the Jeffersonian ideal of the semi-pastoral citizen. Gentlemen, if they worked at all, had once worked at home. (The U.S. president still did.) Early clerks’ offices had fireplaces and lounge chairs to try to replicate home comforts. In the preindustrial world, piecework systems for industries like textile manufacturing made the homestead the center of much working-class labor as well. Early fantasies of remote work looked backward as well as forward.

Wright’s hatred of commuting seems excessive today, and some of it rests on eccentric concerns. Still, his fear that modern office life would corrode personal freedom was borne out. In the average twenty-first–century white-collar workplace, employees perform their tasks from cubicles, in open-plan offices. They attend meetings (or prepare for meetings) 12 hours a week, according to some estimates. They receive approximately 121 work-related emails per day. These activities are at the heart of a working week that is 44 hours long. Each has been shown, in some cases by more than a century of scientific inquiry, to be distracting, inefficient, and stress-inducing. None are conducive to productivity, and yet each has become entrenched as a default.

These components were not invented for these purposes, and their inventors sometimes watched their repurposing with dismay. The Bürolandschaft (“office landscape”) concept that presaged the modern open-plan office was conceived as anti-hierarchical and organic. (Remnants of these ideals survive in the design choices of certain tech firms: Mark Zuckerberg, for instance, works at the same featureless white desk that his employees use.) When the American firm Herman Miller adapted Bürolandschaft into a modular cubicle system called Action Office, white noise machines were supposed to provide the privacy that had been eliminated. They were seldom installed.

Instead, Action Office was used to maximize floor space. Its chief designer, Robert Propst, had linked openness of design and openness of feeling, imagining a shared space would break the fiefdom of the corner office. In its place, Propst thought, egalitarianism and creative discourse among co-workers would reign. In practice, employers crammed their workers into “cubicle farms” as though they were battery hens. In the 1970s, the average American office building allowed for 500 to 700 square feet per employee; by 2017, the real-world average was about 150. Companies are run “by crass people who can take the same kind of equipment and create hellholes,” Propst told Metropolis magazine in 1998.

Open-plan offices are also preferred surveillance environments. Once, only the lowliest white-collar workers, such as secretaries in typing pools, would share communal spaces. That was before Taylorism tried to wring every drop of productivity from employees, down to how long they spent swiveling in their chairs, efficiencies that required intense managerial scrutiny. The inability to watch over workers was identified very early as an impediment to working from home. In the early 1970s, Don Frifield, a fan of the Nilles approach, argued that what he called “the Great Office Building” was “a kind of latter-day baronial castle,” where most of the peons were engaged in busywork anyway. The U.S. Department of Commerce summarized his views in a report: Frifield “feels the modern office is determined by the belief of management that people who work have to be watched all the time, but the fact is no one can watch white collar workers anyway. Likens present work supervision patterns to the extension of feudal patterns.” Even this wry pessimism did not predict quite how far the “barons” would extend their rule into the home.

Objections to this intrusion may have stalled some of the technologies that remote work would later come to rely on. Machines that could be used to surveil remote work existed much earlier than we remember. The first crude cameraphone transmitted images in 1927; in the 1960s, AT&T previewed a fully functioning landline videophone that transmitted live images both ways. This Picturephone, rolled out commercially in the 1970s, was a colossal flop. “It wasn’t entirely clear that people wanted to be seen on a telephone,” the firm’s corporate historian concluded, though now we disprove his speculation daily. Along with its high price, the Picturephone’s positioning as a work tool doomed it. Customers were happy to see family and friends as they talked; in the 1970s, inviting a voyeuristic boss into the home felt creepy.

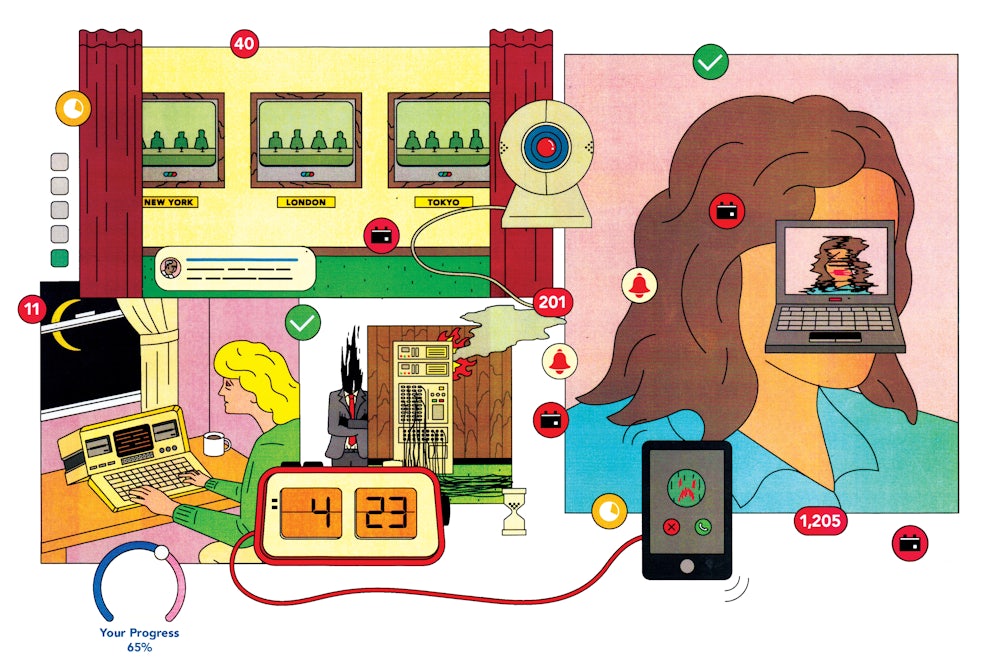

By 2020, management had little compunction about stepping over the threshold, and surveillance is now almost standard, within offices as well as outside them. In May 2019, the research and advisory company Gartner found that 50 percent of companies surveyed were using “nontraditional monitoring” of employees, including analyzing email text, scrutinizing who meets with whom, and collecting biometric data. As working from home became mandatory, firms panic-bought spying software, including key-loggers, screenshots, access to browser history, and details about the number of emails sent. Time Doctor, one of the most popular surveillance modules, includes a Distraction Alert, which notifies the employee (and supervisors) if visits to personal websites or periods of inactivity are detected. One corrective pop-up message reads: “Hi. It seems kind of quiet. Are you still working? Clicking or typing anywhere means you’re working.”

Are you still working? As the barriers between private, personal, and working life dissolve or blur, that question is sometimes hard to answer. Uncanny Valley, Anna Wiener’s memoir of a shortish career in Silicon Valley, is also frequently a memoir of a life lived on this disputed borderland between labor and nonlabor. Colleague as overseer, self as overseer—these roles have become near-mundane. While teleconferencing, Wiener found that she “liked the specific intimacy of video: everyone breathing, sniffling, chewing gum … watching everyone watch themselves while we pretended to watch one another, an act of infinite surveillance.” When she did head in to the office, the vibe was homey, one of many strange transpositions between industry and leisure. Someone there always seemed to be shoeless, or strumming a guitar, or mixing cocktails. People had to go home to get work done.

Some skeptics of remote work have highlighted an unusual feature of post-Covid conditions. There is a new distinction among co-workers: Some came to know one another in shared offices, and formed their co-working styles cheek by jowl. New hires, who have never bonded with their colleagues in person, do not operate so smoothly. This knowing-without-knowing was presaged in one of the very earliest depictions of the computer-linked life, E.M. Forster’s short story “The Machine Stops,” in which human beings communicate through a giant machine, and find their social skills atrophying. “The Machine did not transmit nuances of expression,” one character notices. It gives only a “general idea of people,” while ignoring an “imponderable bloom” that is the “actual essence of intercourse.”

For Wiener and those like her, socializing started to take on this ersatz quality as well. The intra-office chat rooms of her unnamed company were plastered with cartoonish emojis, dedicated to hobbies as well as internal business communications, and thus constantly permeating the membrane between business and pleasure. The day’s true work consisted of “toggling between tabs,” and, at the end of that day, Wiener the teleworker found herself thinking: “Oh right—a body.” Departing her company after more than three years, she got no personal farewell, enjoyed no secular ceremony of leaving the office holding a box of belongings and a plant. “I sat on my bed with my laptop,” she writes, “watching my access to internal platforms be revoked, one by one.… A whole world, zippered up.” One colleague sent a message via the company’s internal messaging: “I didn’t know you worked here.”

Did she work there? The phrase “he who is absent is wrong” may have originated in diplomacy (it is sometimes ascribed to Talleyrand), but it also captures an essential, visceral component of being present in an office—it’s no coincidence we go to work to “perform a role.” Throughout telework’s troubled history, this inverse relationship between physical remoteness and proximity to power has perhaps proved most determinative. No matter how smooth and crystalline the images broadcast from home are, they cannot convey a body through distance and into space. Teleworkers are less likely to be promoted, and in companies with significant work-from-home cohorts, the absent employees sometimes feel like an afterthought.

When Wiener’s company verged on unionizing, one dissatisfied remote worker even asked for a coffee allowance. “I work from a coffee shop,” she said, “I have to buy something when I’m there, and I don’t even drink coffee.” In the book, this is played for laughs—none of the requests went anywhere—but it leaves a disquieting impression. Can remote workers unionize? Can they strike, or negotiate collectively over conditions? The atomization of teleworkers, their loneliness as employees, seem at odds with articulating a shared interest.

Physical proximity is not a precondition for solidarity (era-defining anti-racism protests have sprung from conditions of enforced isolation, after all), but it is more difficult to make common cause at long distance. Likewise, the impromptu social interactions that make work more palatable also make it more creative and productive. Google campuses (and the workplaces like them) are so hedonic partly to encourage employees to stay at work longer. The volleyball and music rooms, even the length of the lines in the cafeteria, are all carefully designed to promote “serendipitous interaction,” the chance, extra-boardroom meetings where ideas may better percolate.

And what is the fate of the coffee shop? It had become a drop-in workplace, paying a tech company’s overheads for the margin on a latte. “What to Do When Laptops and Silence Take Over Your Cafe?” The New York Times asked in 2018, quoting a Sunset Boulevard café owner who said that “three hours for five dollars’ worth of coffee is not a model that works.” These establishments are everywhere from Brooklyn to Chiang Mai, and look uncannily like post-cubicle open-plan offices, except that none of the “colleagues” interact. Such de facto co-working spaces, which achieve a weird, globalized stylistic homogeneity (blonde wood, Edison light bulbs, seltzer on tap), are spontaneous and self-organizing, workhouses for the bourgeoisie in which no foreman is needed to ensure quietude.

That is quite a change for an institution that spent centuries as a place for talk, play, and creativity. In the twentieth century, coffeehouses were so central to cultural production that a single establishment in Zurich, the Café Odeon, could play a role in the incubation of Modernism, Leninism, Dadaism, and the theory of general relativity. Now these spaces are stilled and sterile. Panera Bread may never have lived up to that billing, though the more humble interactions it housed are still worthy of protection if they can be saved, and mourning if they can’t be. Newer libraries have been remodeled along these lines, and anywhere near a college is similarly vulnerable to hostile takeover.

The early champions of remote work had been trying to insulate the middle class from the totalizing tendencies of capitalism, to protect their time and space, as well as natural resources. But the Nilles experiments stumbled and then stalled. California tried a state-assisted string of teleworking hubs in the 1990s that withered once subsidies were withdrawn. The promise of increased leisure was still dangled (Timothy Ferriss’s book The 4-Hour Workweek: Escape 9-5, Live Anywhere, and Join the New Rich preached geo-arbitrage and work conducted from cheap, warm countries with good internet), but most often, an extra four hours was simply added on top of the 40-hour week, without a tropical locale to ease the transition.

The result was (and is) a “homework economy” where work emails announce themselves to be answered at home, on the weekend, and in the evening. Had these futurists paid more attention to the women in their lives (if there were any), they might have been less mistaken. Remote work took a path similar to housework: By the middle of the twentieth century, household chores were revolutionized by affordable electrical labor-saving devices. Yet, by some measures, the housewives of the postindustrial age did more housework than their preindustrial equivalents. Vacuum cleaners, washing machines, sewing machines, and electric irons displaced the men, children, and servants who had previously helped with work in the home. Shopping, motorcar errands, and increased standards of cleanliness ate away the rest of the time that should have been freed up. As Ruth Schwartz Cowan established in her classic study of housework, More Work for Mother, “modern labor-saving devices eliminated drudgery, not labor.” Expectations changed, and so did standards.

A similarly ironic fate met remote work advocates’ drive to “decentralize.” Inherent in the idea was a diminishment of the city, a reduced emphasis on its importance, and critically, a decrease in its size. Some cities did shrink across America, Europe, and Japan, just not for the reasons the corporate pastoralists imagined. They were diminished by urban blight and deindustrialization more than controlled decline. In the United States, retirees and families fled for the suburbs or the hinterlands, while young professionals departed for a few financial capitals. The ascendancy of online stores ate into retail strips, and left malls pockmarked or abandoned. Prior to the pandemic, dating apps and smoking bans did something similar to nightclubs and bars: By 2014, such venues were closing at a rate of six per day.

Telework has been proffered more often as a means of restoring cities than killing them off. Remote work can, it is claimed, rebalance the lopsided emphasis on the coasts, and reverse some of the necrosis in America’s heartland. Internal migration is, after all, a form of commuting, and perhaps brain drain could be reversed by making the brains themselves more mobile. That way, attractive talent could live cheaply, stay close to family and friends, and, in time, refresh economically fallow areas of the country. Some localities have made special legislative attempts to promote these activities. Maryland, Delaware, and Virginia, home to many long-haul commuting government workers, had double incentives: They could save public money if fewer government workers required offices.

But the world, not merely the corporation, needs “serendipitous interaction.” It is indispensable to how we choose what to eat and what to wear, to fashion, love, friendship, culture: all that is most valuable and lasting. And all of these spheres of life draw their energy from the tidal movement of cities, movements set by commutes. Frank Lloyd Wright obsessed over the unnatural time-tabling of these diurnal movements, how they confounded the ancient influence of the sun on life. But it was electric light, not the subway or motorcar, that made this influence wane. Shared circumstances can feel communal, not merely stifling or dehumanizing.

“Not working is maddening,” Ling Ma’s narrator thinks in her prescient 2018 novel, Severance. “The hours pass and pass and pass. Your mind goes into free fall, untethered from a routine.” Ma’s satire takes place in a disease-struck version of New York City; the afflicting malady, called Shen Fever, originates in China and is combated with N95 masks. As the city’s living population thins, the protagonist keeps showing up to her job until she is alone in her workplace, seeing out her stipulated contract period for managers who no longer exist. “I was there to work,” she reasons, “so I would just work.” As her duties dwindle, she revives a photography blog, documenting the city as it becomes ghostly. She is herself a kind of specter, tethered to her former life by her redundant routines. “To live in a city is to take part in and to propagate its impossible systems,” she decides. “To wake up. To go to work in the morning.”

This is not satire—or it is not only satire. When our cities emptied, workers tried to preserve these impossible systems, these schedules and rhythms, even when doing so required affectation. Anna Cox, a professor of human-computer interaction at University College London, told Londoners to engage in a “pretend commute,” which “not only provides an opportunity to build some physical activity into your daily routine,” but also helps you “transition between work and non-work.” Microsoft Teams updated its workplace collaboration software to include a “virtual commute”—a feature, The Wall Street Journal reported, designed to help people mark the start and, crucially, the end of their working day. Perhaps managers were not persuaded that the addition was an improvement; shortly afterward, the entire Microsoft 365 platform introduced productivity analytics. These monitor employees at an individual level, once their virtual commute has reached its destination.