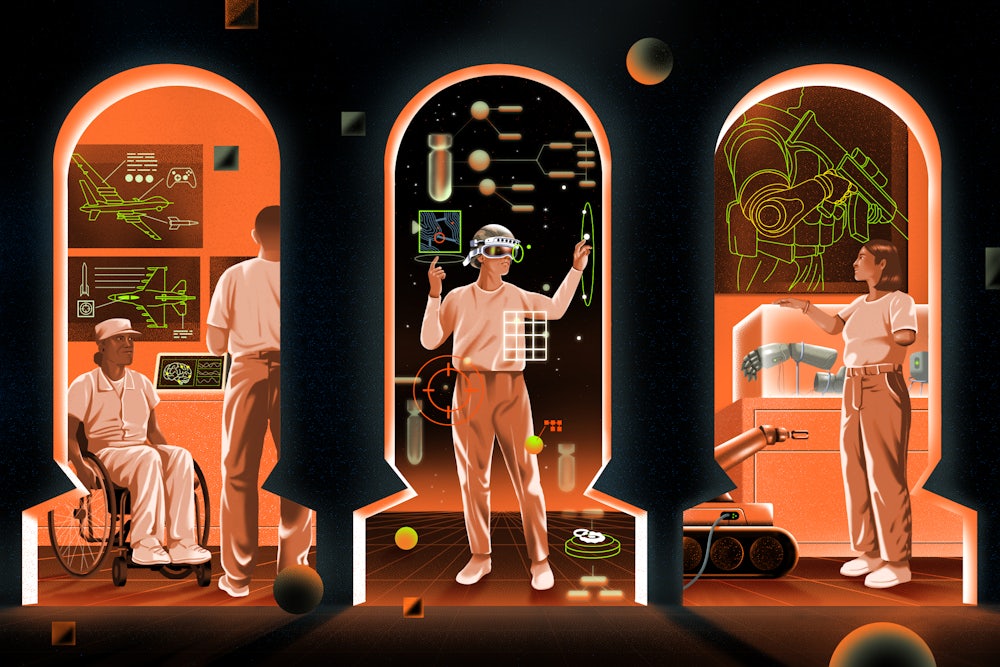

In 2015, the Defense Advanced Research Projects Agency, or DARPA, which is often referred to as the Department of Defense’s “mad science division,” adapted a flight simulator so a woman named Jan Scheuermann, who is paralyzed due to a neurodegenerative condition, could fly an F-35 fighter jet using only her mind—and the probes they implanted in her motor cortex. Scheuermann is a self-described “cutting-edge brain-computer interface lab rat” for DARPA’s Revolutionizing Prosthetics program, which in 2006 began “to expand prosthetic arm options for today’s wounded warriors.”

The Revolutionizing Prosthetics program consisted of two separate projects: the LUKE arm system, which, at $250,000, carries the distinction of being “the world’s most expensive prosthetic arm,” and the Modular Prosthetic Limb, which was “designed primarily as a research tool.” Given that price tag and an unknown life span, it is not surprising that LUKE hasn’t gained much adoption, even among the self-pay amputee elite. Such experimental equipment is decades away from acceptance in a draconian insurance system that has demonstrated an intractable commitment to gatekeeping technological advancements from most disabled people until they are no longer effective, relevant, or desirable.

But once researchers realized they could detach the LUKE arm from its human operator, they began working on a new plan to integrate “militarized versions of these terminal devices onto mobile-robotics platforms, making them efficient enough that human operators won’t have to go into the hazardous zones themselves.” Years on, that is where this technology has found its use: in “small robotic systems used by the military to manipulate unexploded ordnance.”

The “dual-use nature” of DARPA’s Revolutionizing Prosthetics technologies, which purport to create access for a disabled person but then find latent real-world use as a weapon of war, exemplifies an unacknowledged outcome of inclusive design’s “solve for one, expand to many” mandate. When assistive technologies are expanded to police, surveil, maim, and kill before materializing the human-centered solution that inspired their invention, it is worth considering the morality of bringing disabled people into a war apparatus known to produce and perpetuate disability, and death.

The story of how we got here is a tangled one, one that tracks back over a century of history. But we may as well begin with Microsoft, which, despite its well-documented toxic culture, has long believed itself to be uniquely equipped to “provide Corporate America with a blueprint” for disability inclusion best practices. “In 2015, Microsoft published an inclusive design tool kit that has since become a bible for inclusive design. The tool kit has been downloaded more than 2 million times” and has been used to shape such products as its HoloLens augmented reality/virtual reality headset, which was announced that same year.

Not long after the Microsoft HoloLens Development Edition was made available, the Israel Defense Forces tweeted it was “using the … Hololens to bring augmented reality to the battlefield.” Microsoft’s subsequent $22 billion contract with the Department of Defense to supply the U.S. Army with more than 120,000 headsets extended the disabled use case to “the killer use case.”

Whatever might have motivated the creation of the Microsoft Inclusive Design Toolkit, in practice it transformed inclusive design into little more than a referent neologism, used to signal disability inclusion without having to do any of the restructuring required to actually design and engineer accessible systems. The impact of this shift in focus doesn’t just fall on the disabled tester, who rarely benefits from their contribution to the development of these technologies. The employees tasked with building these products often have no idea where their labor will ultimately lead, either. “We did not sign up to develop weapons, and we demand a say in how our work is used,” reads an open letter that dozens of Microsoft employees signed, in response to the DOD deal, because they were “alarmed that Microsoft is working to provide weapons technology to the U.S. military, helping one country’s government ‘increase lethality’ using tools we built.”

The 2019 deal was a follow-up to the initial phase of the project, during which Microsoft supplied DARPA’s Next-Generation Nonsurgical Neurotechnology, or N3, program with the HoloLens so the agency could create “brain interfaces for able-bodied warfighters.” Up until this point, DARPA had claimed it was “focused on technologies for warfighters who have returned home with disabilities of the body or brain.” But suddenly, DARPA’s focus had shifted away from disabled vets, and N3 was now using “cooking as a proxy for unfamiliar, more complex tasks, such as battlefield medical procedures, military equipment sustainment, and co-piloting aircraft,” to develop the HoloLens for war.

In 2023, Microsoft supplemented its Inclusive Design Toolkit with an Inclusive Design for Cognition Guidebook that somehow also managed to propose cooking as a method to identify “what cognitive demands are being asked of the user.” The overlap, then, is not limited to N3 as the program, inclusive design as the methodology, and HoloLens as the technology; cooking is a shared use case as well. By choosing cooking, a common household task, as their exemplar use case, developers fairly explicitly put disability, writ large, at the heart of their work: an unimpeachably “feel-good” north star to follow.

This was no accident. The connections between disability and warfare run deep, through well over a century of history, including the global conflicts that shaped our world. Cooking as a measure of cognition has roots in occupational therapy, a rehabilitative health care practice that was legitimized during World War I, when that era’s new weapons of war, such as poison gas and flamethrowers, didn’t necessarily kill soldiers but sent them home permanently disabled. At the time, occupational therapists broke down leisure activities into cognitive, motor, and neurological components that could be rehabilitated under the supervision of health care practitioners.

By the 1970s, the emphasis had shifted away from leisure activities, and occupational therapists began concentrating on the “activities of daily living” that people encounter in their lives. A small room in one of the formative occupational therapy clinics, which was housed at Johns Hopkins University, was converted into a kitchen where patients could prepare and cook food. According to Kathryn Kaufman, manager of inpatient therapy services in the Johns Hopkins Department of Physical Medicine and Rehabilitation, “We got away from the task-based activities to occupy a person’s time and started integrating interventions to address the areas that patients needed to get back to their lives.”

Those task-based activities derived from the Arts and Crafts movement, which conveniently resolved a conundrum that asylums were facing at the turn of the twentieth century. The Enlightenment’s “moral treatment” approach to therapy tasked public patients with maintaining the asylum grounds because “work was believed to help patients develop self-control and boost their self-esteem.” These institutions couldn’t possibly compel private patients to engage in the labor of maintaining the facilities they were paying for, so curative workshops that housed “crafts such as weaving, carpentry, and basketry were introduced into asylums” for wealthy patients.

Curative workshops laid the foundation for sheltered workshops that employed blind workers who “were principally engaged in broom-making, with chair-caning and hand weaving the other two main activities.” This was the culmination of a shift that began, again, in the aftermath of war. While these health care practices sprang up, in part, to care for soldiers carried off the battlefield with long-term disabilities, they also provided the means to seamlessly push disabled noncombatants into the war-fighting fold. Hundreds of blind workers transitioned to employment in war factories when “the first sizable opportunity for blind people with good skills to move out of the workshops came during World War I.”

Legislation that “afforded qualifying nonprofit agencies for the blind an opportunity to sell to the Government” soon followed. The Wagner-O’Day Act was signed into law on June 25, 1938—coincidentally, the same day as the passage of the Fair Labor Standards Act, section 14(c) of which contained a provision exempting sheltered workshops from minimum-wage laws.

In 1971, Senator Jacob K. Javits sponsored an amendment to the original Wagner-O’Day Act to “include people with significant disabilities.” The amended law, known as the Javits-Wagner-O’Day Act, also established an independent federal agency, later named AbilityOne, to administer a mandatory source of supply for the federal government: contracts awarded to qualifying workshops on a noncompetitive basis.

AbilityOne has adopted the identity of the DOD, its largest customer, by posturing as “an economic citadel” and “a fortress of opportunity.” Harkening back to World War I, AbilityOne and its network of affiliates conflate the employment of intellectually and developmentally disabled people, as well as blind people, with patriotism, using taglines such as “Serving those who serve our nation.” One organization in particular, LCI, which bills itself as “the largest employer of people who are blind in the world,” acquired Tactical Assault Gear, or TAG, “a worldwide best in class” tactical assault gear marketplace, in 2010. Like the pages of many AbilityOne organizations, TAG’s Instagram page is filled with images that are inexplicably inaccessible to the people it purports to champion, and its captions instead incorporate propaganda such as “working hard for those who continually shoulder the hard work.”

The passage of the Americans With Disabilities Act in 1990 brought new teeth to the President’s Committee on Employment of People with Disabilities, or PCEPD, which can be traced back to a Truman-era joint resolution calling for an annual observance of “National Employ the Physically Handicapped Week.” During a 1992 Americans With Disabilities Act Summit, which “brought together leaders in business, labor, government and the disability community,” the committee presented its PCEPD Annual Awards to honor “employers that have developed exemplary approaches” to ADA compliance. These awards planted the seed for a genre of inclusion that shields the very corporations to which the New Deal supplied disabled labor.

In 1994, the Business Leadership Network was established through the PCEPD under the “premise that business responds to their peers.” In 2018, it was rebranded as Disability:IN, a nonprofit organization that advocates for corporate disability inclusion. Disability:IN is best known for its Disability Equality Index, or DEI, “an annual transparent benchmarking tool that gives U.S. businesses an objective score on their disability inclusion policies and practices,” which is created in partnership with the American Association of People with Disabilities (AAPD).

Despite claims of transparency, obfuscation scaffolds Disability:IN’s practices. Its website is peppered with language that describes how it “empower[s] business to achieve disability inclusion and equality,” but isn’t forthcoming about what “disability inclusion” applies to. By failing to clarify that its brand of “disability inclusion” only pertains to hiring and employment practices, Disability:IN allows its corporate partners to do such things as create disability under the guise of disability inclusion.

Here’s why this skullduggery matters: In 2023, eight of the top 10 defense contractors in the United States scored 100 out of a possible 100 on the DEI. Surprisingly, Raytheon’s DEI score dropped to 90, though it had scored 100 out of 100 since 2019. Given how other arms suppliers thrive on the DEI, it seems clear that the 10-point drop in score was in no way a reflection of Raytheon’s portfolio of products that maim and kill innocent civilians. Instead, this drop was most likely a mutually agreed-upon slap on the wrist after Raytheon was sued by a disabled former employee for allegedly failing to pay required long-term disability benefits.

In this way, a practice that began in the Arts and Crafts movement’s efforts to address the plight of factory workers and the declining quality of industrialized goods somehow managed to spawn something much more cynical: a genre of inclusion that hires disabled employees to create products that both disable and disproportionately harm disabled populations.

In 2015, a DARPA-hosted competition tasked participants with developing “robots capable of assisting humans in responding to natural and man-made disasters.” Afterward, a robotics team made up of faculty, engineers, and students from Worcester Polytechnic Institute (WPI) and Carnegie Mellon University summarized their “observations and lessons learned.” Their key takeaway: Despite entering the competition “believing that robots were the issue, we now believe it is the human operators that are the real issue.”

Engineers were now tasked with homing in on a use case that could address human error. Soon after, it occurred to computer scientists Reuth Mirsky and Peter Stone that “a seeing-eye dog is not just trained to obey, but also to intelligently disobey and even insist on a different course of action if it is given an unsafe command from its handler.” It is through this logic that seeing-eye dogs became “fertile research grounds” for A.I. in robotics.

In 2021, Mirsky and Stone proposed a Seeing-Eye Robot Grand Challenge “as a thought-provoking use-case that helps identify the challenges to be faced when creating behaviors for robot assistants in general.” They described blindness as an inspirational manifestation of the technological, ethical, and social challenges robotics needs to overcome, in order to “design an autonomous care system that is able to make decisions as a knowledgeable extension of its handler.”

The Seeing-Eye Robot Grand Challenge has so successfully captured the academic imagination that robot guide dogs are now being engineered in university labs all over the world. Most of these labs use Spot, Boston Dynamics’ agile mobile robot, as their starting point, and when its distinctive yellow shell appears under a headline about one of these robot guide dogs, audiences respond positively. But when the exact same Boston Dynamics model appears under a headline about policing, it arouses fear. This is the real uncanny valley: not some ableist revulsion to prosthetics, as proposed by roboticist Masahiro Mori, but rather an absence of skepticism when burgeoning technologies are intended for “the less fortunate.”

And these boundless promises are backed up by dubious data. After taking up the challenge in his lab, SUNY Binghamton assistant professor Shiqi Zhang said he was “surprised that throughout the visually impaired and blind communities, so few of them are able to use a real seeing-eye dog for their whole life. We checked the statistics, and only about 2% of blind people work with guide dogs [and it] costs over $50,000.”

But the otherwise heavily resourced request for proposals does not offer any attribution for these figures; it is a phantom statistic that has nevertheless acquired no small measure of ubiquity. Past articles and resources have cited the nonprofit Guiding Eyes for the Blind for the 2 percent statistic, but the Guiding Eyes website doesn’t claim authorship of it; it instead describes it as a “frequently cited statistic.” (When made aware of how its website statistic was being used, Guiding Eyes remarked that guide dog partnerships are far too complicated to be distilled into an overly simplistic number.)

Why make note of this? Dubious information with no discernible origin creates an effective scaffold for lofty claims about untapped markets and the potential to serve clients who have been ignored. Here, an innovator establishes value by implying savings on the unnecessary costs they have identified. For disabled people, those unnecessary costs can put a price tag on our lives. The cost-benefit analysis of disability is a vestige of the early eugenics movement; as documented by Dr. Aparna Nair, a disability historian who lives with epilepsy, public flyers in Nazi Germany claimed, for instance, that one disabled person could cost as much as an entire family.

In the decades after World War II, ideas founded in overt eugenics fell out of favor, but the focus on disability as an economic burden persisted. The notion that there is an acceptable price for life has become baked into research justifications, because any reduction in cost, even if not real, is considered a public good.

The Seeing-Eye Robot Grand Challenge justifies the urgency of its innovations by claiming a living, furry seeing-eye dog can cost $50,000. Not only do stakeholders fail to offer any clear theory of how guide dog robots will reduce this cost, they also deftly avoid mentioning the $75,000 price tag for each Boston Dynamics base model they attempt to engineer into a suitable replacement. This indirect disingenuousness is mirrored in heartwarming headlines about the charitable service of robo-hounds, which obscure the reality of their deployment as public servants of the state. Just like robot police dogs, robot guide dogs are primed to patrol our most vulnerable citizens, and this context is vital when considering the cost, not of disabled people, but to disabled people.

Recent headlines continue to illuminate the way this technology is seamlessly bypassing disabled people for the battlefield. Mirsky has joined a consortium of Israeli robotics companies and academic organizations that work together “to jointly develop human-robot interaction capabilities.” It was recently reported that one of the consortium start-ups, Robotican, is treating Gaza as a “testing ground for military robots.” It is unknown how Mirsky’s flagship “guide robot that will assist people with visual impairments” will accelerate militarized support for authoritarian oppression through technologies of surveillance and destruction. But her stated goal for researchers to investigate “among other things, how autonomous a robot can be, the extent to which it can make decisions and perform actions without human intervention,” reifies designers’ attitudes about the passive role to which disabled people are relegated in the development of experimental technologies.

And yet, powerful systems create centripetal forces that pull disabled subjects in to actively develop these technologies as well. Microsoft, for example, recruits disabled subjects to “co-create solutions with us” because, they say, “co-creating and designing with careful observation and empathetic conversations with individuals and groups is key to understanding someone’s behavior.” These exercises in “co-creation” often take the form of workshops or hackathons, which inform Microsoft’s suite of inclusive design products. Once again, however, these products risk finding their way into the war machine.

One such product, the Xbox Adaptive Controller, or XAC, “started out as two hackathon projects at the company after an idea from a veteran with limited mobility,” and was eventually showcased in “Reindeer Games,” Microsoft’s official 2018 holiday ad, in which a disabled boy plays games with his XAC. Microsoft’s decision to market this disabled gamer–led technology as a child’s toy transformed its under-resourced co-creators into secondary users.

Once the product comes to market, the aforementioned co-creators get flung from the powerful system that now bears the name of their contribution. And as evidenced by an October 31, 2023, statement from AbleGamers, the organization that worked with Microsoft for two years on the XAC, co-creators aren’t privy to future decisions about how their ideas will ultimately be expanded. “The truth is, we do not have any inside information, unfortunately our partners at Xbox did not reach out to us as they have done in the past, and we learned about this like everyone else did.”

Soon after, a Microsoft manager clarified that the XAC will be exempt from a new and restrictive third-party accessory policy expected to yield “a probable increase in sales of their own controllers and accessories,” which gives us cause to speculate. What if the only financially viable future for the Xbox Adaptive Controller is not as a gaming device but as a weapon of war? Just as AbleGamers brought more than a decade of experience to its engagement with Microsoft, there are organizations that would be similarly attractive to the multinational weapons supplier, were it to expand another of its inclusively designed products to death.

One such organization, Wounded Eagle UAS, uses its patent-pending “revolutionary Drone Assisted Recovery Therapy,” or DART, to “provide comprehensive training and rehabilitation to our veterans, equipping them to become skilled commercial [small Unmanned Aerial Vehicle or System] operators.” It would likely have some interesting ideas were Microsoft to show interest in adapting its Xbox Adaptive Controller.

This is not some stretch of the imagination: Xbox controllers are already being used by multiple militaries; the U.S. Navy’s USS Colorado is “the first attack submarine where sailors use an Xbox controller to maneuver the photonics masts.” There’s also the British tele-operated Polaris MRZR, which uses an adapted Xbox game console controller. The Israel Defense Forces decided to use the “friendly and familiar Microsoft Xbox controller” to create a “specific user experience” for their young active-duty troops. “Any teen or 20-something who enters the hatch of the Carmel will likely feel familiar with the environment, thanks to video games.” An Xbox Adaptive Drone Controller is a real possibility that risks burdening well-meaning engineers whose efforts to make gaming more accessible are instead used to disable innocent civilians on faraway battlefields and in concentration camps.

In 2016, two University of Washington undergraduates won a $10,000 Lemelson-MIT student prize for a pair of gloves they engineered to translate sign language into text or speech. A video of the two students demonstrating their gloves was uploaded to the internet and continues to resurface regularly nearly a decade later. Its serial virality is sustained by a public lust for technologies that melt disability away into a capitalist cyber euphoria.

It is this fervor for the feel-good that tethers two incompatible audiences to one another: the blissfully ignorant intended viewer who is inspired by the saccharine story of how technology is changing the world (surely for the better!), and the nonconsenting deaf and disabled folk who invariably respond before considering what it would wreak if engineers were to take their valid critiques up as a challenge. In the case of these gloves, culturally competent commenters consistently deride the failure to recognize the sophistication already inherent to American Sign Language, specifically the role of facial expressions in conveying linguistic information and emotion. Taken up as an engineering challenge, that critique points toward tracking signers’ faces as well as their hands. Is the surveillance of facial expressions really something disabled people want to help develop?

This is the lesson: When a new technology crops up, seemingly out of nowhere, that is a signal to dig deeper, because it came from somewhere, and lately it seems that the more nefarious the purpose, the more benevolent the origins.

For example, the University of Illinois Urbana-Champaign has received unprecedented cross-industry support from Amazon, Apple, Google, Meta, and Microsoft for its Speech Accessibility Project to “build stronger, more adept machine learning models so as to better understand more diverse speech patterns.” Nobody seems to be asking why these five competing titans of tech would choose to come together on this one-of-a-kind collaboration, when competition and litigiousness characterize nearly all of their other interactions.

Instead, the project website claims their alliance is “rooted in the belief that inclusive speech recognition should be a universal experience. Working together on the Speech Accessibility Project is a way to provide the best and most expedient path to inclusive speech recognition solutions.” What they don’t note is how “inclusive speech recognition” could also make it possible to discern speech under unusual conditions. What is the likelihood, then, that the Speech Accessibility Project will find itself first serving a surveillance state that’s already laser-focused on disproportionately criminalized populations?

There is a broad body of reporting, spanning decades, that highlights how advancements in assistive tech always seem to spike during times of war, yet it has thus far failed to recognize the wheelchair-to-warfare pipeline that has resulted from these innovations. When a massive corporation announces the next such innovation, a question to ask might be: Who will this ultimately benefit? It also might be useful to ask what the evolutionary effects could be. Because the armed conflicts that defined the twentieth century have given way to the forever wars that have captured the twenty-first: a gaping maw of military misadventure, begging to be fed with the fruits of our invention.

When inclusive design’s “solve for one, expand to many” mandate is taken to its logical conclusion, it will inevitably harm the people it claims to center. And that’s because inclusive design is not a design methodology. It is a marketing mechanism obscuring how disability back-doors injurious advancements in tech. Something truly cynical, perhaps even sinister, is wrought when the most innovative assistive technology isn’t being put to the purpose of “rehabilitating” bodies but, rather, to the act of war itself.