Every day, a Twitter account run by Kevin Roose, a technology reporter for The New York Times, posts a list of the top 10 sources of the highest-performing links on Facebook in the United States.

On one Friday in November, the list began with CNN. The eighth and ninth entries were NPR. But the seven others were from Donald J. Trump, evangelical Trump supporter Franklin Graham, and conspiracy theorist Dan Bongino. Indeed, Bongino, once largely unknown outside the world of right-wing Facebook groups and obscure video sites, owned four of the top 10 spots. On many days Bongino, Trump, Breitbart, Newsmax, and Fox News dominate the list. Occasionally, The New York Times, Senator Bernie Sanders, or former President Barack Obama will puncture the top 10. But consistently, suspect right-wing sources show up with far greater frequency than legitimate news organizations.

What does this list tell us? Not as much as it might seem. The list obscures far more than it illustrates—despite its popularity among reporters and pundits who regularly cite it to demonstrate a right-wing political bias on Facebook. It’s worse than a rough measure of how the planet’s largest media system handles a flood of politically charged content; it’s a distorting one. That’s because it pushes us to focus on Facebook as a player in a narrow, American, partisan shouting match. And by homing in on this microstory, we miss the real, much more substantial threat that Facebook poses to democracy around the world.

Roose’s top 10 list, along with shoddy, hyperbolic broadsides like the recent Netflix documentary The Social Dilemma, and constant invocations of the odious things Facebook failed to remove but should have, have all pushed the timbre of public debate about Facebook to questions of “content moderation.” Obvious political rooting interests aside, these screeds about unchecked incendiary and extremist content coursing through the site are structurally akin to the steady chorus of Republican whining—orchestrated by bad-faith actors such as GOP Senators Josh Hawley and Ted Cruz—about anecdotal and often fictional claims of Facebook “censoring” conservative content. It ultimately matters very little that this item or that item flowed around Facebook.

There is far more to Facebook than what scrolls through the site’s News Feed in the United States—which becomes readily apparent when you follow the campaign money. As the leaders of the two major U.S. parties criticized Facebook for working against their interests, both campaigns spent heavily on the platform’s advertising system. The Biden campaign spent nearly the same amount on Facebook ads that Trump’s reelection effort did. The Trump campaign repeated the Facebook-first tactics that created a strong advantage in the 2016 cycle, using the platform to rally volunteers, raise money, and identify infrequent voters who might turn out for Trump. So while both parties screamed about Facebook and yelled at Mark Zuckerberg as he sat stoically before congressional committees, they gladly fed the beast. Everyone concedes that the power of Facebook, and the temptation to harness it for the lesser good—or no good at all—is too great to resist.

In one sense, this broadly shared political obsession with Facebook—together with other key mega-platforms such as Twitter and Google—was refreshing. For too long policymakers and politicians saw these companies as at worst benign, and all too often as great, American success stories. Criticism was long overdue. Still, the tone and substance of that criticism were unhelpful—even counterproductive.

Panning still further back to assess how Facebook influenced the conjoined causes of decency and democracy around the world, the picture gets darker still. Over the past year, scores of countries with heavy Facebook usage, from Poland to Bolivia, ran major elections—and the results show a deeply anti-democratic trend within the site that goes far beyond partisan complaints seeking to advance this or that body of grievances.

Despite all the noise and pressure from potential regulators, Facebook made only cosmetic changes to its practices and policies that have fostered anti-democratic—often violent—movements for years. Facebook blocked new campaign ads in the week before November 3, but because many states opted for early mail-in voting as they contended with a new spike of Covid-19 infections, the ban kicked in many weeks after Americans had started voting. Facebook executives expanded the company’s staff to vet troublesome posts, but failed to enforce its own policies when Trump and other conservative interests were at stake. Company leaders also appointed a largely symbolic review board without any power to direct Facebook to clean up its service and protect its users. Sure, Trump did not pull off the same Facebook-directed upset he did in 2016, and he lost the popular vote more soundly in 2020—and, unlike the 2016 Clinton campaign, Joe Biden did not allow Trump to flip close states by using targeted Facebook advertisements. But, more than anything Facebook itself did, that was a matter of the Biden campaign playing the Facebook game better.

Regardless, democracy is not about one side winning. Democracy is about one side losing and supporters of that side trusting the result, being satisfied with the process, and remaining willing to play the game again under similar rules. Democracy is also about a citizenry that is willing and able to converse frankly and honestly about the problems it faces, sharing a set of facts and some forums through which informed citizens may deliberate. Clearly, none of that is happening in the United States, and less and less of it is happening around the world. Facebook’s dominance over the global media ecosystem is one reason why.

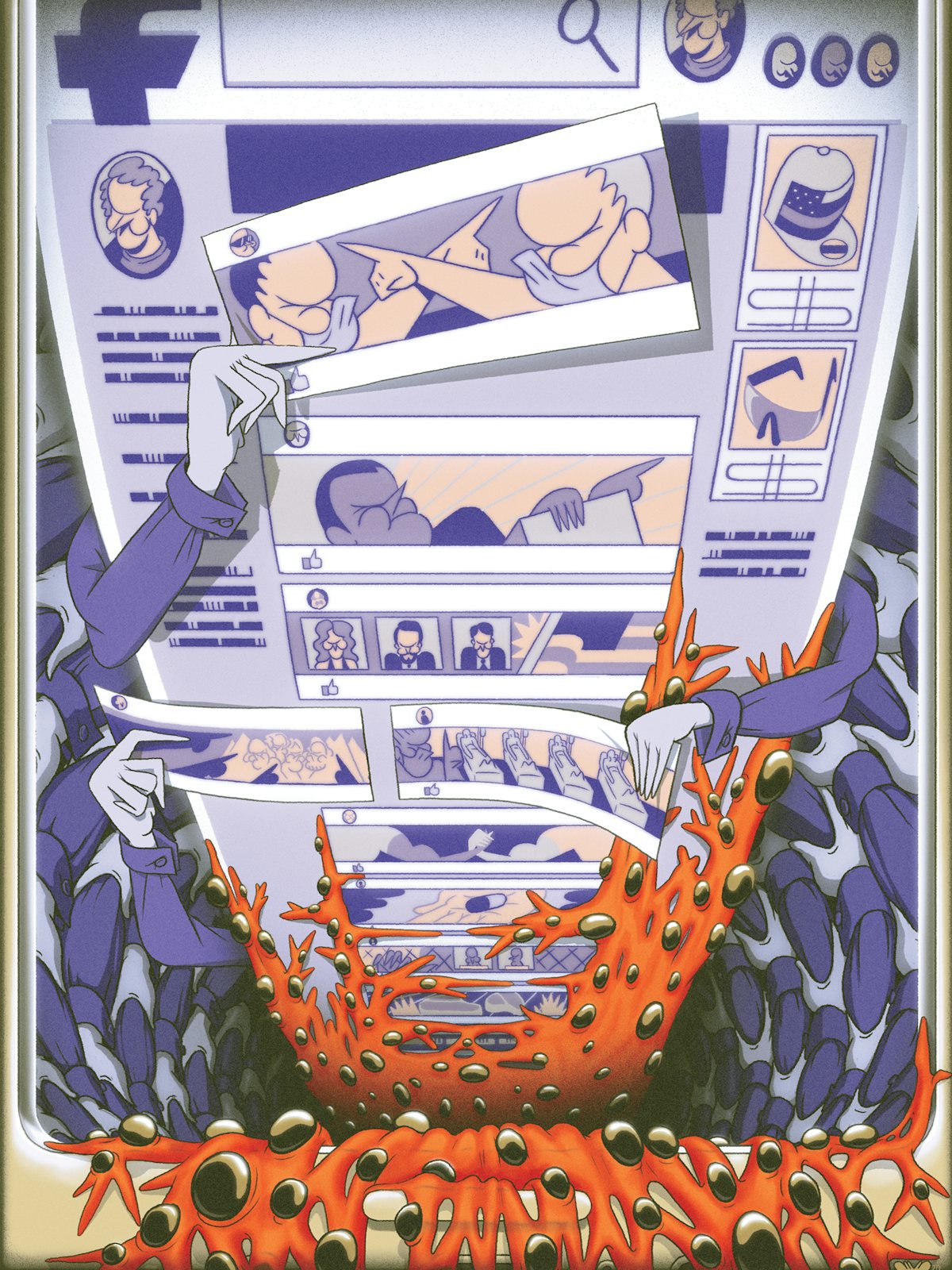

If we want to take seriously the relationship between the global media ecosystem and the fate of democracy, we must think of Facebook as a major—but hardly the only—contributing factor in a torrent of stimuli that flow by us and through us. This torrent is like a giant Facebook News Feed blaring from every speaker and screen on street corners, car dashboards, and mobile phones, demanding our fleeting-yet-shallow attention. Each source and piece of content tugs at us, sorts us, divides us, and distracts us. The commercial imperatives of this system track us and monitor us, rendering us pawns in a billion or so speed-chess games, all half-played, all reckless and sloppy, lacking any sustained thought, resistant by both design and commercial imperative to anything like sustained concentration.

This global ecosystem consists of interlocking parts that work synergistically. Stories, rumors, and claims that flourish on message boards frequented by hackers and trolls flow out to white supremacist or QAnon-dominated Twitter accounts, YouTube channels, and Facebook groups. Sometimes they start on RT (formerly Russia Today) on orders from the Kremlin. Sometimes they end up on RT later. Sometimes they start on Fox News. Sometimes they end up on Fox News later. Sometimes Trump amplifies them with his Twitter account—which is, of course, echoed by his Facebook page, and then by countless other pages run by his supporters. By then, producers at CNN or the BBC might feel compelled to take the item seriously. Then everything churns around again.

Facebook is what Neil Postman would have called a metamedium: a medium that contains all other media—text, images, sounds, videos. It’s improper to ask if Facebook is a publisher or a network, a media company or a tech company. The answer is always “yes.” Facebook is sui generis. Nothing in human history has monitored 2.7 billion people at once. Nothing has had such intimate influence on so many people’s social relations, views, and range of knowledge. Nothing else has ever made it as easy to find like-minded people and urge them toward opinion or action. Facebook may be the greatest tool for motivation we have ever known. It might be the worst threat to deliberation we have ever faced. Democracies need both motivation and deliberation to thrive.

That content cycle—from 8kun to Fox & Friends to Trump and back—and the question of what sort of content does flow or should flow across these interlocking channels of stimuli matter less than the cacophony the content creates over time. Cacophony undermines our collective ability to address complex problems like infectious diseases, climate change, or human migration. We can’t seem to think and talk like grown-ups about the problems we face. It’s too easy and inaccurate to claim that Facebook or any other particular invention caused this problem. But it’s clear that the role Facebook has played in the radical alteration in the global public sphere over the past decade has only accelerated the cacophony—and compounded the damage it creates.

This means that reforming Facebook through internal pressure, commercial incentives, or regulatory crackdowns would not be enough. Breaking Facebook into pieces, creating three dangerous social media services instead of one, would not be enough. We must think more boldly, more radically, about what sort of media ecosystem we need and deserve if we wish for democracy and decency to have a chance in the remainder of the twenty-first century.

The Facebook top 10 list is a perfect example of something that seems to teach us about Facebook and democracy but, in fact, does not. Roose derives the list from CrowdTangle, a Facebook-owned engagement-metrics tool that allows users to track how public content travels across popular Facebook pages, groups, and verified profiles. Importantly, CrowdTangle ignores the growing role of private Facebook groups and any direct messages shared among Facebook users. And the more salient—if decidedly wonky—point here is that this list measures relative prevalence rather than absolute prevalence. “Top 10” is a relative measure. It could be “top 10” out of 450, like the “top 10 tallest players in the NBA.” But the Facebook top 10 list gives no sense of the denominator. A top 10 sampling out of millions or billions might be meaningful, if the reach of the top 10 is significantly greater than the median measure. But given how diverse Facebook users and content sources are, even just in the United States, that’s not likely.

Claiming a position among the top 10 links on Facebook’s News Feed might not mean much if none of the top 10 actually reached many people at all. Given that millions of Facebook posts from millions of sources go up every minute, we can’t assume that any source in the top 10 made any difference at all. The 10 tallest buildings in Washington, D.C., are all very short buildings—in an absolute sense—compared with, say, those in New York City. But they are still relatively tall within D.C.

And, just as important, the prevalence of Facebook sources does not indicate influence. Many people can watch a video of one of Trump’s speeches, yet most of them could reject it, or even share it in order to deride or ridicule it. Others could encounter it and think nothing of it. Propaganda is never simple, unidirectional, or predictable—and most people are not dupes.

There’s also the issue of scale—something that critics of Facebook should never ignore in assessing its impact. Roose’s list simply reflects a moment in time in the United States of America, where only about 230 million of the 2.7 billion Facebook users live. To conclude from it that Facebook favors right-wing content is to ignore the possibility that left-wing content might rule such a list in Mexico or Peru, or that anti-Hindu and anti-Muslim content might dominate such a list in Sri Lanka. It could be all about the Bundesliga, the German soccer league, in Germany. We can’t know. Only Facebook has the rich data that could shed light on both the reach and the influence of particular sources of content across the vast and diverse population of Facebook users. But of course Facebook is not talking—such information may further damage the company’s reputation as a responsible actor in any number of imperiled democratic polities. And, far more consequentially, that data is key to the company’s wildly successful business model.

All these bigger-picture concerns allow us to see a larger, more disturbing truth about Facebook than the stateside push for political influence on the site conveys. Facebook—the most pervasive and powerful media system the world has ever seen—does indeed work better for authoritarian, racist, sexist, extremist, anti-democratic, and conspiratorial content and actors. In fact, if you wanted to design a propaganda machine to undermine democracy around the world, you could not make one better than Facebook. Beyond that, the leadership of Facebook has consistently bent its policies to favor the interests of the powerful around the world. As authoritarian nationalists have risen to power in recent years—often by campaigning through Facebook—Facebook has willingly and actively assisted them.

The question of just what role Facebook could play in the sustenance of democracy first arose in 2011, with the poorly named and poorly understood “Arab Spring” uprisings. At the height of these protests, Facebook received far too much credit for promoting long-simmering resentments and long-building movements across North Africa and the Middle East. Eager to endorse and exploit the myth that a U.S.-based tech colossus was democratizing the new global order with the click of a few million cursors, President Barack Obama and Secretary of State Hillary Clinton embedded technological imperialism into U.S. policy. They aggressively championed the spread of digital technology and social media under the mistaken impression that such forces would empower reformers and resisters of tyranny rather than the tyrants themselves.

Over the ensuing decade, a rising corps of nationalist autocrats, including Vladimir Putin of Russia, Narendra Modi of India, Rodrigo Duterte of the Philippines, Jair Bolsonaro of Brazil, Andrzej Duda of Poland, Ilham Aliyev of Azerbaijan, and the brutal junta that still rules Myanmar, quickly learned to exploit Facebook. The platform’s constant surveillance of users and opportunistic sorting of citizens—to say nothing of its proprietary algorithms that focus attention on the most extreme and salacious content, and its powerful targeted advertising system—have been an indispensable tool kit for the aspiring autocrat. In order to be at the vanguard of a global democratic uprising, Facebook would clearly have to be designed for a species better than ours. It’s as if no one working there in its early years considered the varieties of human cruelty—and the amazing extent to which amplified and focused propaganda might unleash it on entire ethnicities or populations.

Facebook, with its billions of monthly active users posting in more than 100 languages around the world, is biased along two vectors. The first vector is algorithmic amplification of content that generates strong emotions. By design, Facebook amplifies content that is likely to generate strong emotional reactions from users: clicks, shares, “likes,” and comments. The platform engineers these predictions of greatest user engagement by means of advanced machine-learning systems that rely on billions of signals rendered by users around the world over more than a decade. This content could range from cute pictures of babies and golden retrievers to calls for the mass slaughter of entire ethnic groups. The only rule of thumb here is the crude calculation that if it’s hot, it flies. The same rule holds for self-sorting online behavior among Facebook users: The platform nudges them to join groups devoted to particular interests and causes—a feature that also works very much by design, at least since a change ordered by CEO and founder Mark Zuckerberg in 2017. Many of these groups harbor extreme content, such as the dangerous QAnon conspiracy theory, coronavirus misinformation, or explicitly white supremacist and misogynistic content.

The story of our interactions with Facebook—how Facebook affects us and how we affect Facebook—is fascinating and maddeningly complicated. Scholars around the world have been working for more than a decade to make sense of the offline social impact of the platform’s propaganda functions. And we are just beginning to get a grasp of it. The leaders of Facebook seem not to have a clue how it influences different people differently and how it warps our collective perceptions of the world. They also seem to lack anything close to a full appreciation of how people have adapted Facebook to serve their needs and desires in ways the engineers never intended.

Of a general piece with the source-spotting lists as a metric of Facebook influence is a misguided approach to reforming the platform. Just as initial Facebook boosters naïvely embraced it as an engine of democratic reform, so now an emerging cohort of Facebook critics intently documents the “truth” or “fakery” of particular items or claims. This procedural focus has driven people to promote “fact checking” on the supply side and “media education” on the demand side, as if either intervention addresses the real problem.

As sociologist Francesca Tripodi of the University of North Carolina has demonstrated, the embrace of what so many liberals like me would reject as dangerous fiction or conspiracy theories is not a function of illiteracy or ignorance. These stories circulate among highly sophisticated critics of media and texts who do, in reality, check facts, think critically, weigh evidence, and interrogate rhetoric. Like graduate students in English literature, they are adept at close reading, textual analysis, and even what passes for theory. They just do so within a completely different framework than the typical scholar or journalist would employ.

“Based on my data, upper-middle class Conservatives did not vote for Trump because they were ‘fooled’ into doing so by watching, reading, or listening to ‘fake news,’” Tripodi wrote. “Rather, they consumed a great deal of information and found inconsistencies, not within the words of Trump himself, but rather within the way mainstream media ‘twisted his words’ to fit a narrative they did not agree with. Not unlike their Protestant ancestors, doing so gave them authority over the text rather than relying on the priests’ (i.e. ‘the elites’) potentially corrupt interpretation.”

Among other things, this means seeing Facebook as a “sociotechnical system,” constituting hundreds of people (with their biases and preferences) who design and maintain the interface and algorithms as well as billions of people (with even more diverse biases and preferences) who generate their own content on the platform. This latter group contributes photos, videos, links, and comments to the system, informing and shaping Facebook in chaotic and often unpredictable ways—and its chosen patterns of engagement help us understand how and why people share disinformation, misinformation, and lies. Through this sociotechnical understanding of how Facebook works, we can recognize the complex social and cultural motivations that inform user behavior—a set of deeper collective impulses that, in media scholar Alice Marwick’s words, “will not be easily changed.”

If we recognize that the default intellectual position of most people means that identity trumps ideas, that identity shapes ideology, we can see how much work we have to do. And we can see how misunderstanding our media system—or even just the most important element of our media system, Facebook—can mislead us in our pursuit of a better life.

Technology is culture and culture is technology. So don’t let anyone draw you into a debate over “is it Facebook or we who are the problem?” Again, the answer is “yes.” We are Facebook as much as Facebook is us. Those who have studied romance novels, punk rock, and soap operas have understood this for decades. But those who build these systems and those who report on these systems tend to ignore all the volumes of knowledge that cultural scholars have produced.

Facebook works on feelings, not facts. Insisting that an affective machine should somehow work like a fact-checking machine is absurd. It can’t happen. An assumption that we engage with Facebook to learn about the world, instead of to feel about the world, misunderstands our own reasons for subscribing to a service that we can’t help but notice brings us down so often, and just might bring democracy down with us.

Perhaps the greatest threat that Facebook poses, in concert with other digital media platforms, devices, and the whole global media ecosystem, is corrosion over time. What Facebook does or does not amplify or delete in the moment matters very little in a diverse and tumultuous world. But the fact that Facebook, the leading player in the global media ecosystem, undermines our collective ability to distinguish what is true or false, who and what should be trusted, and our abilities to deliberate soberly across differences and distances is profoundly corrosive. This is the gaping flaw in the platform’s design that will demand much more radical interventions than better internal policies or even aggressive antitrust enforcement.

Standard policy interventions, like a regulatory breakup or the introduction of some higher risk of legal liability for failing to keep users safe, could slightly mitigate the damage that Facebook does in the world. But they would hardly address the deep, systematic problems at work. They would be necessary, but insufficient, moves.

Several groups, working with universities, foundations, and private actors, are experimenting with forms of digital, networked interaction that does not exploit users and treats them like citizens rather than cattle. These research groups reimagine what social media would look like without massive surveillance, and they hope to build followings by offering a more satisfying intellectual and cultural experience than Facebook could ever hope to. And most significantly, they hope to build deliberative habits. Civic Signals is one such group. Founded and run by professor Talia Stroud of the University of Texas at Austin and Eli Pariser, the social entrepreneur who coined the term “filter bubble” and wrote a book by that title, Civic Signals is an experiment in designing a platform that can foster respectful dialogue instead of commercially driven noise.

Global web pioneer and scholar Ethan Zuckerman is working on similar projects at the University of Massachusetts at Amherst, under the Institute for Digital Public Infrastructure. More than a decade ago, Zuckerman co-founded Global Voices, a network that allows people across underserved parts of the world to engage in civic journalism. Back in the heyday of democratic prophesying about the powers of the digital world, Global Voices stood out as a successful venture, and it remains strong to this day. Zuckerman still believes that offering alternative platforms can make a transformative difference. But even if they don’t, they still present an invaluable model for future ventures and reform.

While these and similar efforts deserve full support and offer slivers of hope, we must recognize the overwhelming power of the status quo. Facebook, Google, Twitter, and WeChat (the largest social media platform in China) have all the users, all the data, and remarkable political influence and financial resources. They can crush or buy anything that threatens their dominance.

Zuckerman often cites the success of Wikipedia as an alternative model of media production that has had an undeniable and largely positive role in the world (while conceding its consistent flaws in, among other things, recognizing the contributions of women). Digital enthusiasts (including myself) have long cited Wikipedia as a proof-of-concept model for how noncommercial, noncoercive, collective, multilingual, communal content creation can happen. While Wikipedians deserve all this credit, we must recognize that the world has had almost 20 years to replicate that model in any other area, and has failed.

To make room for the potential impact of new platforms and experiments, we must more forcefully, creatively, and radically address the political economy of global media. Here Zuckerman echoes some other scholars to advocate for bolder action. Like professor Victor Pickard of the University of Pennsylvania, Zuckerman promotes a full reconsideration of public-service media—not unlike how the BBC is funded in the U.K. Pickard and Zuckerman both join economist Paul Romer in advocating a tax on digital advertising—so, Facebook and Google, mainly—to maintain a fund that would be distributed to not-for-profit media ventures through some sort of expert-run committees and grantlike peer review.

I would propose a different, perhaps complementary tax on data collection. We often use stiff taxes to limit the negative externalities of commercial activities we see as harmful yet difficult or impossible to outlaw. That’s why we have such high taxes on cigarettes and liquor, for instance. While tobacco companies push the cost of their taxes to the addict, a tax on surveillance would put the cost squarely on the advertiser, creating an incentive to move ad spending toward less manipulative (and, most likely, less effective) ad platforms. The local daily newspaper might have a chance, after all, if a few more car dealerships restored their funding.

Data-driven, targeted Facebook ads are a drain on a healthy public sphere. They starve journalism of the funding that essential news outlets—especially local ones—have lived on for more than a century. They also present a problem for democracy. Targeted political ads are unaccountable. Currently, two people residing in the same house could receive two very different—even contradictory—ads from the same candidate for state Senate or mayor. Accountability for claims made in those ads is nearly impossible because they are so narrowly targeted. I have proposed that Congress restrict the reach of targeted digital political advertisements to the district in which a given race is run, so that every voter in that district—whether it’s for city council or to secure a state’s electoral votes for president—would see the same ads from the same candidates. A jurisdiction-specific regulation for such advertising would not violate the First Amendment—but it would send an invaluable signal that robust advertising regulation is both viable and virtuous.

Because anything the Congress would do would have limited influence on global companies, Congress must make taxes and restrictions strong enough to hinder bad behavior overall and force these companies to alter their defining practices and design. The problem with Facebook is not at the margins. The problem with Facebook is Facebook—that its core business model is based on massive surveillance to serve tailored and targeted content, both ads and posts. Other countries might follow the U.S. model (or the European model if, as is likelier, Europe takes a more aggressive and creative strategy toward reining in the reach of these oligopolies). One of the blessings and curses of being American is that the example this country sets influences much of the world. When we retreat from decency, democracy, and reason, it encourages others to move in the same direction. When we heal and strengthen our sense of justice and our democratic habits, we offer examples to others as well. For too long, we have been laughed at for mouthing the word “democracy” while really meaning “techno-fundamentalism.” We can no longer pretend.

But we must concede the limited reach of any local ordinance, just as we must recognize the formidable political power of the industry’s giants. There is no profit in democracy. There is no incentive for any tech oligopoly to stop doing what it is doing. The broken status quo works exceedingly well for them—and especially for Facebook.

That’s why we also must invent or reinvest in institutions that foster deliberation. This means reversing a 50-year trend in sapping public funding for museums, libraries, universities, public schools, public art, public broadcasting, and other services that promote deep, thoughtful examination of the human condition and the natural world. We should imagine some new forums and institutions that can encourage deep deliberation and debate so we can condition ourselves to fulfill John Dewey’s hopes for an active, informed, and engaged citizenry. We can’t simultaneously hope to restore faith in science and reason while leaving the future of science and reason to the whims of private markets and erratically curious billionaires.

Facebook deserves all the scrutiny it has generated and then some. We are fortunate that Zuckerberg’s face no longer graces the covers of uncritical magazines on airport bookstore shelves, right next to glowing portraits of Elizabeth Holmes and Theranos. But the nature of that criticism must move beyond complaints that Facebook took this thing down or failed to take that thing down. At the scale that Facebook operates, content moderation that can satisfy everyone is next to impossible. It’s just too big to govern, too big to fix.

The effort to imagine and build a system of civic media should occupy our attention much more than our indictments of Facebook or Fox News do today. We know what’s wrong. We have only begun to imagine how it could be better.

Democracy is not too big to fix. But the fix will likely take a few more decades. If this plan of action seems mushy and ill-defined, that’s because it is. The struggle to foster resilient democracy has gone on for more than two centuries—and even then, we’ve never really had it. We’ve only had glimpses of it. Now that Trump is gone and Facebook is in a position to be chastened, we can’t afford to assume that democracy is restored in the United States or anywhere else. But if we can take the overlapping system failures of Trumpism and Facebookism as an opportunity to teach ourselves to think better, we have a chance.