A scientist can spend several months, in many cases even years, strenuously investigating a single research question, with the ultimate goal of making a contribution—little or big—to the progress of human knowledge.

Succeeding in this hard task requires specialized, years-long training, intuition, creativity, in-depth knowledge of current and past theories and, most of all, lots of perseverance.

As a member of the scientific community, I can say that, sometimes, finding an interesting and novel result is just as hard as convincing your colleagues that your work actually is novel and interesting, that is, that it deserves publication in a scientific journal.

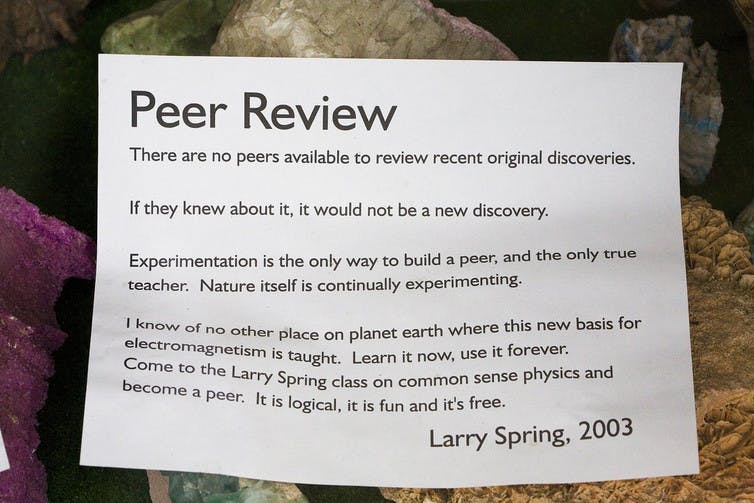

But, prior to publication, any investigation must pass the screening of the “peer review.” This is a critical part of the process—only after peer review can a work be considered part of the scientific literature. And only peer-reviewed work will be counted during hiring and evaluation, as a valuable unit of work.

What are the implications of the current publication system—based on peer review—on the progress of science at a time when competition among scientists is rising?

The impact factor and metrics of success

Unlike in math, not every publication counts the same in science. In fact, at least initially, in the eyes of a hiring committee the weight of a publication is primarily given by the “impact factor” of the journal in which it appears.

The impact factor is a metric of success that counts the average past “citations” of articles published by a journal in previous years. That is, how many times an article is referenced by other published articles in any other scientific journal. This index is a proxy for the prestige of a journal, and an indicator of the expected future citations of a prospective article in that journal.
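As a rough illustration, the calculation behind such a metric can be sketched in a few lines of Python. The two-year window and all the numbers below are assumptions made for the example, not figures for any real journal.

```python
def impact_factor(citations_received, articles_published):
    """Average number of citations per article over a window of past years.

    citations_received: citations counted this year to articles the journal
        published during the window (assumed here to be the previous two years).
    articles_published: number of articles the journal published in that window.
    """
    if articles_published <= 0:
        raise ValueError("the journal must have published at least one article")
    return citations_received / articles_published


# Hypothetical journal: 400 articles in the previous two years,
# cited 1,200 times in total this year.
example = impact_factor(1200, 400)
```

In this made-up case each article is cited three times on average, so the journal's impact factor would be 3.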

For example, according to Google Scholar Metrics 2016, the journal with the highest impact factor is Nature. For a young scientist, publishing in journals like Nature can represent a career turning point, a shift from spending an indefinite number of extra years in a more or less precarious academic position to getting university tenure.

Given its importance, publishing in top journals is extremely difficult, and rejection rates range from 80 percent to 98 percent. Such high rates imply that sound research can also fail to make it into top journals. Often, valuable studies rejected by top journals end up in lower-tier journals.

Big discoveries also got rejected

We do not have an estimate of how many potentially groundbreaking discoveries we have missed, but we do have records of a few exemplary wrong rejections.

For example, economist George A. Akerlof’s seminal paper, “The Market for Lemons,” which introduced the concept of “asymmetric information” (how decisions are influenced by one party having more information), was rejected several times before it could be published. Akerlof was later awarded the Nobel Prize for this and other later work.

That’s not all. Only last year, it was shown that three of the top medical journals rejected 14 out of 14 of the top-cited articles of all time in their discipline.

The question is, how could this happen?

Problems with peer review

It might seem surprising to those outside the academic world, but until now there has been little empirical investigation of the institution that approves and rejects all scientific claims.

Some scholars even complain that peer review itself has not been scientifically validated. The main reason behind the lack of empirical studies on peer review is the difficulty in accessing data. In fact, peer review data is considered very sensitive, and it is very seldom released for scrutiny, even in an anonymous form.

So, what is the problem with peer review?

In the first place, assessing the quality of a scientific work is a hard task, even for trained scientists, and especially for innovative studies. For this reason, reviewers can often disagree about the merits of an article. In such cases, the editor of a high-profile journal usually makes the conservative decision and rejects it.

Furthermore, for a journal editor, finding competent reviewers can be a daunting task. In fact, reviewers are themselves scientists, which means that they tend to be extremely busy with other tasks like teaching, mentoring students and developing their own research. A review for a journal must be done on top of normal academic chores, which often means that a scientist can dedicate less time to it than it deserves.

In some cases, journals encourage authors to suggest reviewers’ names. However, this feature, initially introduced to help the editors, has been unfortunately misused to create peer review rings, where the suggested reviewers were accomplices of the authors, or even the authors themselves with secret accounts.

Furthermore, reviewers have no direct incentive to do a good review. They are not paid, and their names do not appear in the published article.

Competition in science

Finally, there is another problem, which has become worse in the last 15-20 years, as academic competition for funding, positions, publication space and credit has increased along with the number of researchers.

Science is a winner-take-all enterprise, where whoever makes the decisive discovery first gets all the fame and credit, whereas all the remaining researchers are forgotten. The competition can be fierce and the stakes high.

In such a competitive environment, experiencing an erroneous rejection, or simply a delayed publication, might have huge costs to bear. That is why some Nobel Prize winners no longer hesitate to publish their results in low-impact journals.

Studying competition and peer review

My coauthors and I wanted to know the impact such competition could have on peer review. We decided to conduct a behavioral experiment.

We invited 144 participants to the laboratory and asked them to play the “Art Exhibition Game,” a simplified version of the scientific publication system, translated into an artistic context.

Instead of writing scientific articles, participants would draw images via a special computer interface. And instead of choosing a journal for publication, they would choose one of the available exhibitions for display.

The decision whether an image was good enough for a display would then be taken following the rule of “double-blind peer review,” meaning that reviewers were anonymous to the authors and vice versa. This is the same procedure adopted by the majority of academic journals.

Images that received high review scores were to be displayed in the exhibition of choice. They would also generate a monetary reward for the author.

This experiment allowed us to track for the first time the behavior of both reviewers and creators at the same time in a creative task. The study produced novel insights on the coevolution of the two roles and how they reacted to increases in the level of competition, which we manipulated experimentally.

In one condition, all the images displayed generated a fixed monetary reward. In another condition—the “competitive condition”—the reward for a display would be divided among all the successful authors.

This situation was designed to resemble the surge in competition for tenure tracks, funding and attention that science has been experiencing in the last 15-20 years.
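The difference between the two reward conditions can be sketched in Python. This is only an illustration of the rule described above: the function name and the amounts are hypothetical and are not taken from the experiment.

```python
def reward_per_author(num_displayed, reward, competitive):
    """Payoff for each author whose image is displayed in an exhibition.

    num_displayed: number of images accepted for display in that exhibition.
    reward: reward attached to a display (hypothetical amount).
    competitive: if True, the reward is divided among all successful authors;
        if False, every successful author receives the full fixed reward.
    """
    if num_displayed == 0:
        return 0.0  # nobody was displayed, so nobody is paid
    if competitive:
        return reward / num_displayed
    return float(reward)


# Hypothetical round: four images displayed, reward of 2.0 per display.
fixed_payoff = reward_per_author(4, 2.0, competitive=False)
shared_payoff = reward_per_author(4, 2.0, competitive=True)
```

With these made-up numbers, each successful author earns 2.0 in the fixed condition but only 0.5 in the competitive one, so every additional accepted image lowers the payoff of the others.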

We wanted to investigate three fundamental aspects of competition: 1) Does competition promote or reduce innovation? 2) Does competition reduce or improve the fairness of the reviews? 3) Does competition improve or hamper the ability of reviewers to identify valuable contributions?

Here is what we found

Our results showed that competition acted as a double-edged sword on peer review. On the one side, it increased the diversity and the innovativeness of the images over time. But, on the other side, competition sharpened the conflict of interest between reviewers and creators.

Our experiment was set up in such a way that in each round a reviewer would rate three images on a scale from 0 to 10 (self-review was not allowed). So, if the reviewer and the reviewed author chose the same exhibition, they would be in direct competition.

We found that a substantial number of reviewers, aware of this competition, purposely lowered the review score of a competitor to gain a personal advantage. In turn, this behavior led to a lower level of agreement between reviewers.

Finally, we also asked a sample of 620 external evaluators recruited from Amazon Mechanical Turk to rate the images independently.

We found that competition did not improve the average level of creativity of the images. In fact, with competition many more works of good quality got rejected, whereas in the noncompetitive condition more works of lower quality got accepted.

This highlights the trade-off in the current publication system as well.

What we learned

The experiment confirmed there is a need to reform the current publication system.

One way to achieve this goal could be to allow scientists to be evaluated in the long term, which in turn would decrease the conflict of interest between authors and reviewers.

This policy could be implemented by granting long-term funding to scientists, reducing the urge to publish innovative work prematurely and giving them time to strengthen their results before facing peer review.

Another way could involve removing the requirement of “importance” of a scientific study, as some journals, like PLoS ONE, are already doing. This would give more innovative studies a better chance of passing the screening of peer review.

Discussing openly the problems of peer review is the first step toward solving them. Having the courage to experiment with alternative solutions is the second.


This article was originally published on The Conversation. Read the original article.