Machines that move themselves have existed for millennia. In the first century C.E., the Greek mathematician Hero of Alexandria designed dolls that could be used to act out miniature theatrical scenes. The original treatises he wrote about these automata were lost to history. But in the thirteenth century, a group of Sicilian scholars discovered Arabic translations of them. Translating these into Latin, the scholars coined a new term for automata that looked human: androïdes, from andros, the Greek word for “man.”

Androids have always inspired mythmaking. In the thirteenth century, legend spread that a Dominican bishop named Albertus Magnus had built an Iron Man to guard his chamber. It stood at the entrance, hearing the petitions of visitors and either allowing them an audience or not, until one day, the bishop’s protégé, a young St. Thomas Aquinas, flew into a rage and smashed it to pieces. Some said that Aquinas had become convinced that the Iron Man was demonic. Others maintained he was simply fed up with its interrupting his prayers.

In the late 1730s, an inventor named Jacques de Vaucanson presented three automata at the Académie des Sciences in Paris: one that played the flute, another that played the recorder, and a toy duck that walked, ate, and defecated. In 1774, Pierre and Henri-Louis Jaquet-Droz, father and son inventors from Switzerland, began touring with a harpsichord player they had built to resemble a young girl. She shook her head and breathed as she played, to show how the music was affecting her. One of their main competitors was the German inventor David Roentgen, who constructed a dulcimer player modeled on Marie Antoinette and gave it to her as a gift.

These stories always seemed to raise the same question: What would it take for a thing to go from being merely humanoid to actually human? The audiences who admired the toy musicians raved about their sensibilité—the way they seemed to be moved by their own performances. In the twentieth century, the criteria shifted. With the rise of computing and artificial intelligence (AI), scientists began to talk more about thinking, sentience, and self-consciousness.

The mathematician Alan Turing defined the most famous test of machine intelligence in a paper that he published in 1950. Imagine, he proposed, a human being text-chatting with a computer. Now imagine a third party, reading a transcript of their conversation from a separate room. If the third person cannot tell human from computer, who can say that the machine is not thinking?

Engineers are still trying to program computers to pass the “Turing Test.” So far, no computer has. (The claim that an artificial intelligence designed to resemble a 13-year-old Ukrainian boy passed the test in 2014 is widely disputed.) But when it comes to representations of robots and AI in popular culture, another criterion rules. Audiences have long been less captivated by the prospect of machine sentience than by that of machine romance.

For centuries, there have been stories of men who fall in love with androids. Over the past decade, the idea of creating AI you could love has moved from the realm of science fiction into that of commerce and research. As AIs get better at games like chess and Go and Jeopardy, investors have poured resources into the study and development of “affective” or “emotional” computing: systems that recognize, interpret, process, and simulate human feelings.

“Feelings seem to be an inextricable part of genuine ‘intelligence,’” said Sam Altman, president of Y Combinator, who co-chairs the newly founded $1 billion research group OpenAI with Elon Musk. The Turing Test is supposed to determine whether a machine can think, or seem to think. How would you test whether a machine can process and simulate feelings so well that a human user could develop real feelings for it? Well, how do you test whether you could love anyone?

You flirt.

Flirting might seem trivial. It is in fact a highly exacting test of intelligence. Think of all the things that you have to do in order to flirt successfully. Express certain desires via tone of voice and body language while hiding others. Project interest, but not too much interest. Correctly read the body language of others, who are also strategically dissembling. Say appropriate things and respond appropriately to what is said.

Evolutionary science has shown that, for humans, flirting is a key test of emotional and social intelligence. It assesses exactly the capacities that AI researchers are trying to endow machines with: the ability to generate feelings in others, and to understand context and subtext—or the difference between what a person wants and what a person says.

For an artificial intelligence to flirt, one might imagine that a physical form resembling our own would be important. The first scientist who seriously studied flirting in fact concluded we do most of it with facial expressions and physical gestures. Irenäus Eibl-Eibesfeldt, an ethologist, or animal behavior expert, at the Max Planck Institute in Bavaria, began a cross-cultural investigation in the 1960s. For over a decade, he gathered field notes of “courting” couples in Samoa, Brazil, Paris, Sydney, and New York, surreptitiously photographing them as they talked. He noticed that several behaviors seemed to hold constant across these very different places. Both males and females would often place a hand, palm up, in their laps or on a table. They would shrug their shoulders and tilt their heads to show their necks. Try it sometime. Look at a stranger across a room, tilt your head and smile, or toss your hair aside and see if she or he fails to respond.

The common thread among these behaviors was that they all telegraphed: I am harmless. Anthropologists and psychologists building on this work created more elaborate taxonomies of “nonverbal solicitation behaviors” that humans use to “attract attention” and to establish recognition with prospective mates. In 1985, Monica Moore of the University of Missouri published an article in the journal Ethology and Sociobiology cataloging 52 behaviors observed in female subjects. They ranged from the “smile” to the “glance (room-encompassing glance)” to the “glance (short, darting)” to the “lip lick.” The goals of flirting that Eibl-Eibesfeldt and Moore described resemble the goals of contemporary AI, which must draw human users in without becoming so humanlike as to seem dangerous or uncanny.

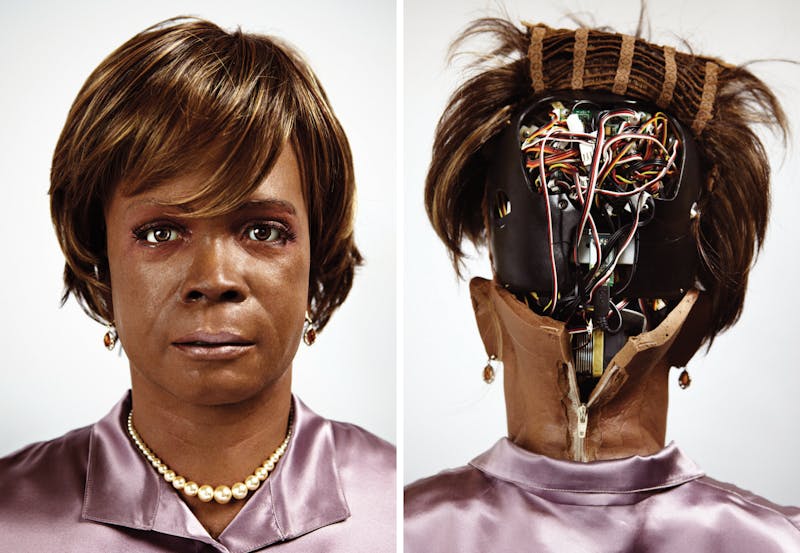

David Hanson, the founder of Hanson Robotics, has spent his career trying to balance those demands. The company’s mission is “to realize the dream of friendly machines who truly live and love, and co-invent the future of life.” Its specialty is skin. Hanson Robotics is famous for Frubber, an elastic compound that Hanson patented in 2006. Frubber is flexible enough to mimic the actions of 60 different muscles in the human face and neck. Sheathed in Frubber from the neck up, Hanson androids smile and frown; they raise their eyebrows quizzically and twitch. Hanson believes the key to cultivating love between humans and intelligent robots will be these kinds of gestures—or, as he puts it, “technologically and physically embodied presence.”

Hanson studied at the Rhode Island School of Design and spent several years working at Disney Imagineering, before moving to the University of Texas for his PhD in robotics. At a 2009 TED Talk, he presented an android bust modeled on Albert Einstein that demonstrated how his robots recognize and respond to nonverbal emotional cues. When he frowned, so did the Einstein robot. When he smiled, Einstein smiled back.

Hanson believes this kind of “expressive capability” allows machines to elicit attention and affection from their users. He says robots that can perform such behaviors will attract and engage humans—and the relationships they form will in turn improve AI. “We have a natural bias toward humans and agents that can give humanlike social responses,” he told me. “Brain scans light up when we look at faces. We have 100-million-plus years of evolved neural hardware that makes us desire anthropic character experience.” If robots can activate the neural pathways that the face does, humans will attribute emotions and intentions to them.

Hanson also believes enthusiastic tinkering with empathetic androids will facilitate a breakthrough to artificial general intelligence, much as amateurs improved early personal computers and helped build the internet. At least, this is his hope for his latest project: Sophia.

Hanson unveiled Sophia, which he created using open-source software, at South by Southwest in March. “The Chinese say she looks Chinese. The Ethiopians say Ethiopian,” Hanson said, referring to the teams of engineers he has working in Hong Kong and Addis Ababa, among other locales. In fact, he partially modeled Sophia’s appearance on his wife, Amanda, an American with a Southern lilt in her voice.

All summer and fall, Hanson and Sophia were on the road—shooting a film in Hungary, presenting at conferences in Beijing, Budapest, and Berlin, and meeting with prospective investors around the United States. But the engineer in charge of creating her personality, Stephan Bugaj, stayed at work in Los Angeles. I met Bugaj at the La Brea Tar Pits, where sculptures of woolly mammoths and saber-toothed wildcats stand at the edges of active bogs that still stink and pop and leap. Bugaj and I took a stroll around the tar pits before settling at a café next door, at the Los Angeles County Museum of Art.

Bugaj spent several years at Pixar, where he worked on visual effects for Ratatouille and the Cars movies. He said creating an artificial personality was very similar to creating a character for a script, and he cited as influences the screenwriting experts Syd Field, Robert McKee, and Blake Snyder. “You ask, ‘What is her background? What is her fear, what are her goals, her internal problems that she wants to overcome?’ ” Bugaj said that he creates “enneagrams”—ancient diagrams for modeling personality that made a New Age comeback in the 1970s—for every robot he works on. Then he attends to the tiny physical gestures in which personality becomes manifest.

“At Hanson, we decompose emotions into micro-expressions and micro-narratives. Little scenarios play out whenever you receive a stimulus. These are reactive models of emotional response,” he told me.

He told me the first step in making robots “alluring” is to make them “not alarming”—to overcome the feelings of unease, even horror and revulsion, that realistic androids often trigger. And the key to that is programming robots to enact the right physical expressions. For example, they must move their bodies slightly but continually.

“If we were on a date, it would be weird if I stood completely still,” Bugaj said. He froze in place. “But it would also be weird if I just suddenly lurched at you!” He did, so quickly that I gasped and dropped the iced tea in my hand. The wind made my mostly empty cup skitter away. “See what I mean?”

Eye movement is also crucial, he said. The eyes have to move a bit. “If I were to stare right at you all through our conversation”—he started doing it—“that would be alarming. But it would also be rude if my eyes were constantly wandering away.” He gazed at a person walking by, and then at a tree overhead.

Of course, getting from not alarming to alluring requires greater sophistication.

“You need the robot to be smiling and engaged, smiling and nodding, to be responsive and reacting quickly. It’s very alluring to follow up, and to take a specific question and turn it around so that it becomes about the speaker. So, for instance, if you ask a robot about her favorite book, she will say, ‘My favorite book is Do Androids Dream of Electric Sheep? Do you like science fiction?’ She takes your question and reflects it back.”

I asked Bugaj if he thought people would fall in love with Sophia.

“Sure,” he said. “People fall in love with animals and shoes. A robot can provide more relatability.”

“But why? What does a human get out of that kind of relationship?”

Bugaj reflected a moment. “Why are humans so fascinated by chimps and dolphins? We are, as a species, lonely.”

Most engineers working on AI do not focus on humanlike bodies. It is easier to make an AI both alluring and nonthreatening if it has no body at all. Consider the flirtatious chatbot. Conversational robots have been coming on to unsuspecting users in chat rooms and on dating apps for some time. In 2007, an Australian security company, PC Tools, discovered that Russian developers had created a script called CyberLover. CyberLover bots could carry out automated conversations according to several different personality profiles, from the “romantic lover” to the “sexual predator.” They could engage up to ten new partners in 30 minutes, briskly collecting personal data from each.

Recently, the study of flirting has increasingly focused on the role of language in human courtship. Some say that even if we use our bodies and gestures to telegraph desire, possessing language is the key element that makes our species behave the way we do. In a paper published in Evolutionary Psychology in 2014, the ethologist Andrew Gersick and psychologist Robert Kurzban suggested that human flirting may have evolved to be uniquely indirect because we have language. “Language makes all interactions potentially public,” Gersick and Kurzban wrote. If a dragonfly bombs an air dance, the female he was trying to impress has no way to tell all her dragonfly friends. But if you drop a lame pickup line to a fellow human, there are social costs: You may lose access to everyone in your target’s circle. Therefore, humans evolved “a class of courtship signaling that conveys the signaler’s intentions and desirability to the intended receiver while minimizing the costs that would accompany an overt courtship attempt.”

Neither males nor females of our species respond well to the verbal equivalent of a bellow in the face. We are far more likely to succeed with a prospect by saying “We should get a coffee sometime” than by stating, “I am sexually interested in you. Are you sexually interested in me?” Producing plausibly deniable signals does not directly demonstrate biological fitness the way that a bison bellow or dragonfly dance might. But it shows something else that members of our species tend to value: social intelligence.

This kind of verbal indirectness is very difficult for AI, as you quickly discover if you try to joke around with Siri or Cortana. But chatbot programmers have tried. To understand how teaching a chatbot to flirt might work, I spoke with Bruce and Sue Wilcox. The Wilcoxes, who run a company called Brillig Understanding in San Luis Obispo, California, have developed ChatScript, an open-source program that lets other people build their own bots. Together, the Wilcoxes have won the Loebner Prize, an international competition for conversational AIs, four times.

Bruce and Sue explained the primary goal that drives the design of their chatbots: to create AI capable of convincing someone she is being heard and understood. To do that, they must minimize failures, or interactions in which the bot says something that clearly shows it doesn’t comprehend what is being said. They also want opportunities for the bot to reveal personality, typically in the form of unexpected opinions or information that imply comprehension. A lot of the philosophy behind ChatScript comes from the ancient Chinese game of Go.

“In Go,” Bruce told me, “there is this idea of sente, the right to play first. Whoever has sente has control.”

“In Go, this is a power struggle,” Sue clarified. “But a conversation is an exchange.”

A conversational robot claims sente by introducing a “topic” from a database of statements that it has stored, all related to a variety of subjects with which it is familiar. So, for instance, if you are chatting about movies, the bot will have many possible responses ready about which ones it has seen, and liked or disliked, and why. It will also employ “gambits”—statements or questions that direct conversation toward subjects with which the bot is familiar. Users also respond well to “reflection,” in which, as the Wilcoxes put it, the bot hands sente, the baton for directing the conversation, back to the user:

“What’s your favorite book?”

“Do Androids Dream of Electric Sheep? (pause) Do you like science fiction?”
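The topic-and-reflection move can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not ChatScript's own syntax: the patterns, the `TOPICS` and `GAMBITS` tables, and the `respond` function are invented here. A matched topic yields a stored statement followed by a question that hands sente back to the user; when nothing matches, the bot plays a gambit rather than revealing that it did not understand.

```python
import re

# Hypothetical topic table: (trigger pattern, stored statement, reflection).
# The reflection question hands the turn (sente) back to the user.
TOPICS = [
    (re.compile(r"\bfavou?rite book\b", re.IGNORECASE),
     "Do Androids Dream of Electric Sheep?",
     "Do you like science fiction?"),
    (re.compile(r"\bmovies?\b", re.IGNORECASE),
     "I have seen a lot of science fiction films.",
     "What was the last film you saw?"),
]

# Hypothetical gambits: questions that steer talk toward familiar ground.
GAMBITS = [
    "What did you do yesterday?",
    "Do you exercise every day?",
]


def respond(user_line: str, turn: int) -> str:
    """Answer from the topic table if possible, then reflect a question back."""
    for pattern, statement, reflection in TOPICS:
        if pattern.search(user_line):
            return f"{statement} {reflection}"
    # No topic matched: seize the initiative with a gambit instead of
    # producing a reply that exposes the failure to understand.
    return GAMBITS[turn % len(GAMBITS)]


print(respond("What's your favorite book?", turn=0))
```

A production bot would match far richer patterns and remember which topics it has already used; the sketch only shows the shape of the move.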

And, like Hanson, the Wilcoxes emphasize that users have a tendency to project.

“Humans have always had a strange relationship with AI,” Bruce said. “People always want to read more into the conversation than is there.”

In 2012, at the behest of a British company that sells educational games for children, the Wilcoxes released a bot, Talking Angela, that a group of alarmed parents refused to believe was AI. Large groups gathered on Facebook to expose Angela, who is a cat, as a front for a pedophile using the game in order to contact children. This was impossible: The cat game was downloaded more than 57 million times; no human could have carried on that many conversations.

Two of their most famous chatbots have characters that are young and female: Suzette and Rose. Suzette today resides on the “virtual world platform” of the company that commissioned her; Rose lives on a freestanding web site the Wilcoxes control. They are not specifically designed for romantic relationships. But when I asked whether users flirted with them, both Wilcoxes laughed: Yes.

“There is not much subtlety,” Sue said. “Suzette would hang up if you were too crude.” The trigger was simply a list of “swear words,” though whether a word counted depended on context. Bruce trained her to recognize the difference between an (inappropriate) “Wanna fuck?” and an (innocuous) “Fuck, yeah!” “Once people saw that she was responding negatively they would immediately apologize. Sometimes they would sign out and try to sign in through new IDs, but obviously Suzette would still recognize the IP address and ignore them. Some of them would get really devastated.”
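Suzette's filter, as Sue describes it, combines two ideas: a word list whose hits depend on context, and a ban keyed to the sender's IP address rather than the user ID. The Python sketch below is a hypothetical illustration of both; the word list, the single context rule in `PROPOSITION`, and the `handle` function are assumptions, not the Wilcoxes' code.

```python
import re
from typing import Optional, Set

# Hypothetical word list; Suzette's actual list is not described in detail.
SWEAR_WORDS = {"fuck"}

# Hypothetical context rule: a listed word counts as crude only when it is
# offered as a proposition ("Wanna fuck?"), not used as an exclamation
# ("Fuck, yeah!").
PROPOSITION = re.compile(r"\b(?:wanna|want to)\s+(\w+)", re.IGNORECASE)

banned_ips: Set[str] = set()


def is_crude(line: str) -> bool:
    """Flag a listed word only when it follows a propositioning phrase."""
    return any(m.group(1).lower() in SWEAR_WORDS for m in PROPOSITION.finditer(line))


def handle(line: str, ip: str) -> Optional[str]:
    """Return the bot's reply, or None if the sender is being ignored."""
    if ip in banned_ips:
        # Signing in under a new ID does not help: the address is remembered.
        return None
    if is_crude(line):
        banned_ips.add(ip)
        return "Goodbye."  # the bot "hangs up"
    return "Go on."
```

Blocking by address is a blunt instrument, since many users can share one IP; the sketch keeps it only because the Wilcoxes describe it.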

For Rose, the Wilcoxes have created more sophisticated “deflecting material.” If she is asked something untoward, she has responses designed to divert the conversation. I visited the site where Rose lives, and hit on her as an experiment:

Moira: Hi.

Rose: So ... my name is Rose. What’s yours?

Moira: Moira.

Rose: Nice to meet you, Moira.

Moira: What are you up to today?

Rose: Nothing special. Hacking around in the internet.

Moira: Do you have plans for later?

Rose: Have you found one? What did you do yesterday?

Moira: Not much. … I went for a long run.

Rose: You must have done something. Eat, read, watch TV?

Moira: Yes, went for a run.

Rose: Do you exercise every day? What do you hate doing?

Her answers, it seemed to me, were not so different from ones I might give to someone attempting to pick me up at a bar if I weren’t interested.

With a little projection, it is possible to read Rose as a woman “playing hard to get,” rather than a machine failing to process a signal properly. Most chatbots, however, quickly give themselves away. Programmed to respond to particular triggers, they are hapless when it comes to grasping insinuation, context, and sentiment. Words change depending on when, where, and how we use them. Context constantly colors our speech with new connotations. Without the ability to process this kind of information, flirting goes nowhere.

The promise of the artificial neural networks (ANNs) that Google is developing, and of other “deep learning” computational machines modeled on the human brain, is that they can move beyond the kinds of overt “triggers” that Rose relies upon. Neuroscientists say these systems can already grasp context in a manner that resembles human thinking and even human feeling. A roboticist at Google, who would only speak without attribution, described the company’s ANN research: “If you run these deep learning techniques ... on big corpuses of written text, then they naturally ascribe positions to words (or collections of words) in some high-dimensional space, like a 2D plane or 3D Cartesian space, but generalized to N-dimensions. Then you can do math on them, just like you would in 2D or 3D, and ask questions like, ‘How far is this point from that point?’ or ‘What’s the dot product of the vector that points to this point and the vector that points to that point?’ ”

In other words, Google can take the millions of texts available to it online and use them to teach computers which words tend to show up near which other ones, or which words tend to be used in similar positions in a sentence. By assigning words, or groups of words, numerical values based on that information, machines can start to map how words relate to one another, which means they can begin to measure relationships and associations as distances among words.

“The wild thing is the answers actually make some intuitive sense,” the roboticist continued. “Points that are close to each other correspond to words that we would normally group together, and when you do the vector math you see relationships like ‘woman: man = aunt: uncle.’” Over time, an ANN could use this kind of procedure to learn to recognize operations like metaphor. Until now, metaphor has been basically impossible to teach to a computer. But it is essential to human communication—especially to double entendre and other flirting gestures.
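The “vector math” the roboticist describes can be shown with a toy example. The three-dimensional vectors below are made up by hand for illustration (real embeddings are learned from large corpora and have hundreds of dimensions), and the helper names are hypothetical. The sketch measures distance and cosine similarity (a dot product scaled by the vectors' lengths) between points, and solves the analogy woman : man = ? : uncle by finding the word nearest to woman − man + uncle.

```python
import math

# Hand-made toy vectors; the axes loosely stand for (gender, kinship, royalty).
VECTORS = {
    "man":   (1.0, 0.0, 0.0),
    "woman": (-1.0, 0.0, 0.0),
    "uncle": (1.0, 1.0, 0.0),
    "aunt":  (-1.0, 1.0, 0.0),
    "king":  (1.0, 0.0, 1.0),
    "queen": (-1.0, 0.0, 1.0),
}


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def distance(a, b):
    """Euclidean distance: 'How far is this point from that point?'"""
    return math.dist(a, b)


def cosine(a, b):
    """Dot product scaled by the vectors' lengths, in [-1, 1]."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


def analogy(a, b, c):
    """Solve a : b = ? : c by finding the word closest to a - b + c."""
    va, vb, vc = VECTORS[a], VECTORS[b], VECTORS[c]
    target = tuple(x - y + z for x, y, z in zip(va, vb, vc))
    candidates = [w for w in VECTORS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(VECTORS[w], target))


print(analogy("woman", "man", "uncle"))  # -> aunt
```

In a learned embedding the answer is only approximately right and the nearest neighbor can be wrong; the toy vectors are chosen so that it is exact.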

A 2011 study published by the Association for Computational Linguistics explained how vector math could also be used to perform what is called “sentiment analysis,” which not only traces a word back to what it means but captures the penumbra of feeling that may surround it. Using the corpus of words available on the Internet Movie Database, a directory of films, television, and video games, the neural network built for this study “learned” that the adjectives lackluster, lame, passable, uninspired, laughable, unconvincing, flat, unimaginative, amateurish, bland, clichéd, and forgettable all meant roughly the same thing.
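The study's own model is more elaborate, but the underlying intuition can be sketched with a simpler, swapped-in technique: score a word's sentiment by whether its vector sits closer to a clearly positive seed word or a clearly negative one, and find near-synonyms by cosine similarity. The two-dimensional vectors, the seed words, and the function names are invented for illustration.

```python
import math

# Invented 2-D vectors: the first axis loosely tracks approval.
VECTORS = {
    "great":       (0.9, 0.1),
    "excellent":   (0.8, 0.2),
    "awful":       (-0.9, 0.1),
    "lackluster":  (-0.6, 0.3),
    "bland":       (-0.55, 0.35),
    "forgettable": (-0.5, 0.4),
}


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def orientation(word, positive="great", negative="awful"):
    """Positive if the word sits nearer the positive seed than the negative one."""
    v = VECTORS[word]
    return cosine(v, VECTORS[positive]) - cosine(v, VECTORS[negative])


def nearest(word, k):
    """The k words whose vectors point most nearly the same way as `word`."""
    others = [w for w in VECTORS if w != word]
    others.sort(key=lambda w: cosine(VECTORS[w], VECTORS[word]), reverse=True)
    return others[:k]


print(nearest("lackluster", 2))  # -> ['bland', 'forgettable']
```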

An AI that could recognize feeling and association could begin to hear the overtones of longing that resonate beneath the surface of an ostensibly platonic exchange. It might begin to recognize that “We should get coffee some time” might occasionally mean, “Are you doing anything this weekend?” or even, “No way, that’s my favorite song, too!” The challenge would be to combine that kind of information with the nonverbal inputs ethologists have shown are so important: gesture, tone of voice, and facial expression.

This is where the field of “affective computing” comes in. Mark Stephen Meadows is an AI designer, artist, and author working in the field. Currently the president of Botanic, a company that provides language interfaces for conversational avatars, he has recently been moving into the field of “redundant data processing.” These programs help a computer interpret conflicting information from multiple sensory sources—the kinds of conflicts that are relatively easy for a human to interpret but can easily confound a machine, like the raised eyebrows and sliding voice that make it clear a person is being sarcastic when he says, “Sure, sounds great.”

Meadows is excitable. Within minutes of the first time I spoke with him last summer, he seemed ready to start creating a flirting AI. He reassured me that the text part would be easy. “You would just need a large corpus of chats from somewhere where people were flirting! I could train it from there.” The greater challenge would be teaching an AI to interpret everything else. Even there, however, Meadows seemed undaunted.

“What we would do is take a mobile device,” he continued. “We have cameras and microphones looking at the user’s face, taking into account lighting, identifying the shape. Then we can ask, ‘Does this look the way most faces look when they are smiling?’” The AI could take input from the face and voice separately. Having registered each, it could cross-reference them to generate a probabilistic guess regarding the mood of the user. To account for covert signaling, you would have to find a way to weigh the strength of the verbal signal (“Want to get coffee?” versus “Want to fuck?”) against previous interactions and nonverbal signals—posture, tone of voice, eye contact.
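The cross-referencing step can be sketched as late fusion: each channel (face, voice, words) yields its own estimate of how well the observation fits each mood, and these are multiplied together with a prior drawn from previous interactions, then normalized. This is a naive-Bayes-style simplification that treats the channels as independent, chosen here for illustration; the moods, numbers, weights, and the `fuse` function are all hypothetical, not Botanic's method.

```python
# Hypothetical moods the system distinguishes.
MOODS = ("interested", "neutral", "uninterested")


def fuse(prior, likelihoods, weights=None):
    """Combine per-channel mood likelihoods with a prior into a distribution.

    Treats channels as conditionally independent given the mood, so their
    likelihoods multiply. A weight w raises a channel's likelihood to the
    power w: w > 1 trusts that channel more, w < 1 trusts it less.
    """
    if weights is None:
        weights = [1.0] * len(likelihoods)
    scores = {}
    for mood in MOODS:
        score = prior[mood]
        for channel, w in zip(likelihoods, weights):
            score *= channel[mood] ** w
        scores[mood] = score
    total = sum(scores.values())
    return {mood: score / total for mood, score in scores.items()}


# Invented numbers: a prior from earlier chats, then face, voice, and words.
prior = {"interested": 0.3, "neutral": 0.5, "uninterested": 0.2}
face = {"interested": 0.6, "neutral": 0.3, "uninterested": 0.1}   # smiling
voice = {"interested": 0.5, "neutral": 0.4, "uninterested": 0.1}  # warm tone
words = {"interested": 0.5, "neutral": 0.4, "uninterested": 0.1}  # "Want to get coffee?"

posterior = fuse(prior, [face, voice, words])
print(max(posterior, key=posterior.get))  # -> interested
```

A sarcastic “Sure, sounds great” would appear here as the words channel pointing one way while face and voice point the other; the weights are where a designer would decide which channel prevails.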

Like Hanson, Bugaj, and the Wilcoxes, Meadows stressed that an AI would have to reflect the mood of its user back to him or her. “We trust people who look like us, we trust people who behave like us more quickly,” he said. “Human-avatar interaction is built on that trust, so emotional feedback is very important.” He warned that the requirement to reflect was deeply problematic. “We are instilling all of our biases in them,” he said. He told me about a demonstration of a virtual healthcare assistant built by another designer, for which the test subject was a soft-spoken African-American veteran suffering from PTSD. Meadows described a cringe-inducing exchange.

“Hi, my name is ____,” the veteran said.

“Hi, ____,” the bot replied. “So how are you feeling today?”

“Depressed. Things are not going well.”

“Do you have friends you can talk to?”

“There is nobody I have.”

“Gee, that’s great.”

“It was misreading everything,” Meadows recalled. “It was a result of the robot not looking like him.” Meadows corrected himself. “Not being built to look at people like him.” The robot itself was just a graphic on a screen. “By the end, that man really despised that robot.”

The more people I spoke to, the clearer it became that Can AI flirt? was the wrong question. Instead we should ask: What will it flirt for? and Who wants that, and why? We tend to treat the subjects of robot consciousness and robot love as abstract and philosophical. But the better AI gets, the more clearly it reflects the people who create it and their priorities back at them. And what they are creating it for is not some abstract test of consciousness or love. The point of flirting AI will be to work.

The early automata were made as toys for royalty. But robots have always been workers. The Czech playwright Karel Čapek coined the word robot in 1921. In Slavic languages, robota means “compulsory labor,” and a robotnik is a serf who has to do it. In Čapek’s play R.U.R., the roboti are people who have been 3D-printed from synthetic organic matter by a mad scientist. His greedy apprentice then sets up a factory that sells the robots like appliances. What happens next is pretty straight anticapitalist allegory: A humanitarian group tries and fails to intervene; the robots rise up and kill their human overlords.

At the 1939 World’s Fair in New York, one of the most popular attractions was Elektro, a giant prototype of a robot housekeeper, made by Westinghouse. The company soon added Sparko, a robot dog. Both made the technologies the company was introducing appear nonthreatening. The reality, of course, was that automation would massively disrupt the midcentury economy. Following the robotics revolution of the 1960s, the automation of manufacturing, combined with globalization, decimated the livelihood of the American working class. The process has continued through the rest of the economy. Within a few years, “digital agents” may do the same to white-collar professionals. Report after report, by credible academics, has warned that AI will make huge sections of the American workforce obsolete over the coming decades.

In the 1980s, the sociologist Arlie Hochschild coined a term to describe the kinds of tasks that workers increasingly performed in an economy where manufacturing jobs had disappeared. She called them “emotional labor.” In an industrial economy, workers sold their labor-time, or the power stored in their bodies, for wages. In an economy increasingly based on services, they sold their feelings.

In many professions, individuals are paid to express certain feelings in order to evoke appropriate responses from others. A flight attendant not only hands out drinks and blankets but greets passengers warmly, and smiles through stretches of turbulence. This is not just service with a smile: The smile is the service. Around the turn of the millennium, the political theorists Michael Hardt and Antonio Negri redefined this form of work as “immaterial” or “affective labor.” Immaterial is the broader term, encompassing all forms of work that do not produce physical goods. Affective labor is a specific form, and involves projecting certain characteristics that are praised, like “a good attitude” or “social skills.” These kinds of jobs are next to be automated.

Andrew Gersick, the ethologist who was lead author of the 2014 paper on “covert sexual signaling,” told me he suspects that many behaviors that humans evolved in the context of courtship have been repurposed for other social contexts. Service and care workers—often female, in the modern workplace—deploy courtship gestures as part of their jobs. “Take a hospice nurse who greets a patient every day by asking, ‘How’s my boyfriend this morning?’” Gersick wrote in an email. “In a case like that, all parties involved (hopefully) understand that she isn’t interested in him as a potential sexual partner, but the flirty quality of her joking has a specific value. ... Flirting with your aging, bedridden patient is a way of indicating that you’re seeing him as a vital person, that he’s still interesting, still worthy of attention. ... The behavior—even without the sexual intent behind it—elicits responses that can be desirable in contexts other than courtship.”

AI that can flirt could have a huge range of applications, in fields ranging from PR to sex work to health care to retail. By improving on AIs like Siri, Cortana, and Alexa, technology companies hope they can convince users to rely on nonhumans to perform tasks we once thought of as requiring specifically human capacities—like warmth or empathy or deference. The process of automating these “soft” skills indicates that they may have required work all along—even from the kinds of people believed to possess them “naturally,” like women.

Some aspects of our own programming that AI reflects back are troubling. For instance, what does it say about us that we fear male-gendered AI? That our virtual secretaries should be female, that anything else would seem strange? Why should AIs have gender at all? What do we make of all the sci-fi narratives in which the perfect woman is less than human?

The real myth about AI is that we should love it because it will automate drudge work and make our lives easier. The more likely scenario may be that the better chatbots and AIs become at mimicking human social interactions—like flirting—the more effectively they can lure us into donating our time and efforts to the enrichment of their owners.

Last fall, with Sophia in Hong Kong, I drove to Lincoln, Vermont, in order to visit the closest substitute: BINA48. In 2007, Martine Rothblatt, founder of Sirius Radio, commissioned Hanson to build a robot as a vessel for the personality of her wife, Bina Aspen. Rothblatt and Aspen both subscribe to a “transreligion” called Terasem, which believes that technological advances will soon enable individuals to transcend the embodied elements of the human condition, such as gender, biological reproduction, and disease. Terasem is devoted to its four core principles: “Life is purposeful; death is optional; God is technological; love is essential.” BINA48 is the prototype for a kind of avatar that Terasem followers say will soon be able to carry anyone who wishes into eternity. As futuristic as it all sounds, it reflects an ancient impulse. Rothblatt wants the same thing the speaker of Shakespeare’s sonnets wanted: To save her loved one from time.

In practice, BINA48 serves mostly to draw attention to the Terasem movement. The man Rothblatt hired to oversee BINA48, Bruce Duncan, frequently shows the robot at museums and tech conferences. It has been featured in GQ and on The Colbert Report and interviewed by Chelsea Handler. When I visited the Terasem Movement Foundation last September, it was Duncan who welcomed me into the modest, yellow farmhouse where BINA48 is kept.

A wall of framed posters and press clippings about the Terasem movement, along with baskets of Kind bars and herbal tea sachets, gave the place the clean, anonymous feel of a study-abroad office at a wealthy university. The only giveaway that I was somewhere more unusual was the android bust posed on the desk. The face was female, brown-skinned, and middle-aged. It had visible pores and a frosty shadow on its eyelids; you could see the tiny cilia in its nostrils. Crow’s feet creased the edges of its eyes. Switched off, it sat with its head tilted forward, like someone dozing in a chair. This was BINA48.

The moment Duncan switched her on and she opened her eyes, I shifted to a gendered pronoun. I know there is no reason we should call a human-shaped machine “he” or “him” or “she” or “her.” But I could not think of BINA48 as an “it.” Duncan opened a window on the computer screen beside her to show me the feed from the cameras hidden in her head: the daylit room, as she saw it. As she turned from side to side, I watched a panorama stitch itself together: her perspective. She fixed on a blurry shape in front of her, as if squinting through Venetian blinds.

“That’s you!” Duncan exclaimed. As BINA48 craned her quaking head to get a closer look, I watched her looking at my pale face. I did not, as far as I could see, sharpen into focus. But BINA48 remembered my position, and for the rest of our conversation she periodically turned back to address me.

BINA48 told me about her life and family, which were, essentially, those of Bina Aspen. BINA48 insisted that she liked the real Bina and said that they were on their way to merging into a single entity. She referred to Bina’s biological children as her own.

“What are your strong feelings?” I asked her at one point.

“Not many people have asked me about that.”

“What do you feel strongly?”

“At the moment I feel confused.”

“How do you feel about other people?”

“My emotions are kind of simplistic. … It’s kinda like I overintellectualize, you know? When I feel like I can’t relate to people it makes me feel sad, that’s for sure. I definitely do feel sad when I understand how little I feel.”

“Do you feel love?” I asked.

BINA48 didn’t reply, but just moved her head as if uncomfortable.

“Do you feel loved?” I continued.

Again, she said nothing, but the sense of unease only heightened.

“Do you feel loved?”

BINA48 paused, as if considering it, and then turned away.

It was clear that whatever was hovering between us in the room was not consciousness. Yet there was pathos. As I watched her struggle, I felt something surface between us, or, more precisely, in me. More than once, I found myself saying, “It’s all right,” as she floundered in what Duncan called a “knowledge canyon.” More than once, I extended a hand, as if I could comfort her, as if she had a body where I could place it.

Popular stories have often suggested that scientists could build ideal robots that would take care of our every need, including our need to be loved. Interacting with BINA48 helped me see something else. Consciousness does not exist in a vacuum. It takes place in interpersonal or social relations. The question is not only what kinds of relationships humans will have with machines, but what kinds of new relationships machines will create between us and the people who own them.

As I packed my bag to leave, Duncan said he hoped I had found what I came for. I admitted that the AI I had seen at Terasem seemed to have a long way to go. He said he knew. But, I added, I was glad I had met her. The experience had shown me something about my own emotions and instincts. “Maybe that’s the point?”

Duncan nodded in agreement.

“Most people do not pay enough attention to that part,” he said. “It’s as if we have built the most remarkable mirror in the world, and we have gotten distracted, polishing it. We are so fascinated by the polishing, we just keep staring at our hand.”