The game is simple, designed for a child and intended to teach users about diet and diabetes. I sit opposite Charlie, my diminutive fellow player. Between us is a touch screen. Our task is to identify which of a dozen various foodstuffs are high or low in carbohydrate. By dragging their images we can sort them into the appropriate groups.

Charlie is polite, rising to greet me when I join him at the table. We proceed, taking turns, congratulating each other when we make a right choice, and murmuring conciliatory comments when we don’t. It goes well. I’m beginning to take to Charlie.

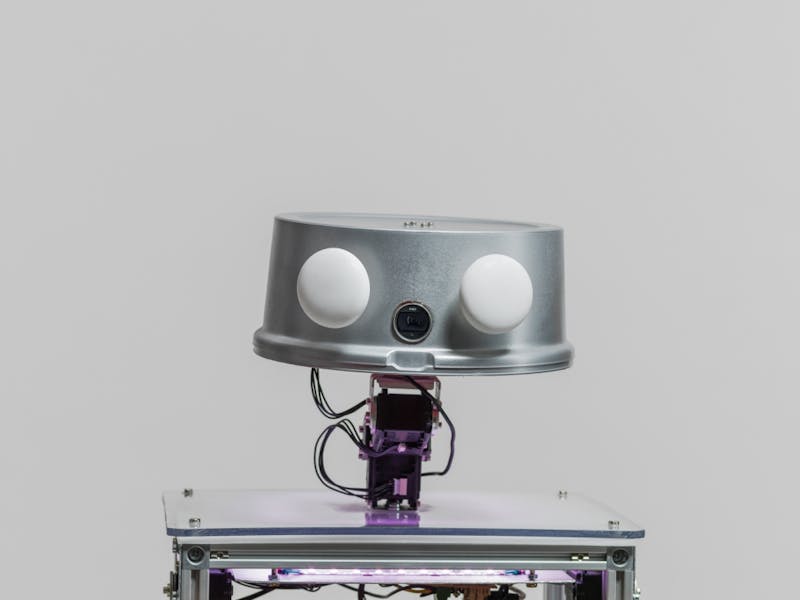

But Charlie is a robot, a two-foot-tall electromechanical machine, a glorified computer. It may move, it may speak, but it is what it is: a machine that happens to look humanoid. How can I ‘take’ to it?

Charlie’s intended playmates aren’t sixty-something Englishmen, they’re children. Children naturally interact with dolls, imagining them to be sentient beings. It’s a part of childhood. But I’m an adult, for God’s sake. I should have put away such responses to dolls… shouldn’t I?

In truth my reaction to Charlie, far from being odd or childish, is pretty typical. Robots, of course, are hardly new. Over the last few decades we’ve had industrial devices that assemble cars, vacuum our floors and shunt stuff around warehouses. But the 2010s have seen a rise in the attention paid to robots of the kind that most of us still think of as robots: autonomous machines that can sense their surroundings, respond, move, do things and, above all, interact with us humans. We all recognize R2-D2, WALL-E and scores of their lesser-known kin. The unnerving thing is that their nonfictional counterparts are extremely close at hand. Some press stories are exotic—those about ‘sexbots’ being among the more sensational—but many have featured robots at the less hedonic end of social need: disability and old age.

This has set me wondering how I might cope with the experience—not for an hour or a day, but for months, years. Not tomorrow, but very soon, I will have to get used to the idea of living with robots, most likely when I’m elderly and/or infirm. Contemplating this, my line of thought has surprised and disturbed me.

Modern medicine and increasing longevity have conspired to boost the need for social care, whether in the home or in institutions. “There’s a pressing requirement for robots in the social care of the elderly, partly because we have fewer people of working age,” says Tony Belpaeme, Professor in Intelligent and Autonomous Control Systems at Plymouth University. Traditionally among the poorest paid of the workforce, carers are an ever more scarce resource. Policy makers have begun to cast their eyes towards robots as a possible source of compliant and cheaper help.

The robots already in production, Belpaeme tells me, are principally geared to monitoring the elderly and infirm, or providing companionship while, as yet, performing only the most straightforward of physical tasks. Wait… companionship? “Yes,” says Belpaeme, deadpan. “Of course it would be better to have companionship from people…” He points out that for all sorts of reasons this can’t always be achieved. “Studies have shown that people don’t mind having robots in the house to talk to. Ask the elderly subjects who take part in these studies if they’d like to have the robot left in the house for a bit longer, and the answer is nearly always yes.”

Consider our relationship with nonhuman entities of a different type: animals. The ancient bonds between us have changed, of course: hunting, transport, protection and other such necessities have slipped to a secondary role. The predominant function of domestic animals in advanced industrial societies is companionship.

When medical researchers started to take an interest in the health effects of pet ownership, they began to find all sorts of beneficial consequences, physical as well as mental. Though somewhat debated, these include reductions in distress, anxiety, loneliness and depression, as well as a predictable increase in exercise. Pets seem to reduce cardiovascular risk factors such as serum triglyceride and high blood pressure.

The pleasures of animals as companions—and the real distress that may follow their loss or death—are self-evident. Research in Japan has revealed a biological and evolutionary basis to the relationship, at least in so far as it applies to one group of pets. Japanese scientists measured the blood levels of oxytocin in dogs and their owners, had them gaze at one another for an extended period, then repeated the measurements.

If you already know that oxytocin is the hormone associated with building a bond between mothers and their babies, you’ll guess where this is going. Dogs have enjoyed a long period of domestication, during which their psychology as well as their physical attributes have been subject to intense selection. What the Japanese researchers found was that periods of mutual eye contact raised the oxytocin levels in both parties. In short, they uncovered the physiological basis of loving your dog.

Whether on account of chemistry or for other reasons, there is evidence that the majority of pet owners see their animals as part of the family. “This doesn’t mean they regard them as humans,” says Professor Nickie Charles, a University of Warwick sociologist with a particular interest in animal–human relationships. Close links with animals are often in addition to rather than instead of relationships with family and friends. “But pets are easier and more straightforward, some owners say.”

The suggestion that nonliving things, including robots, might be able to evoke human responses that are quantitatively and even qualitatively comparable to our feelings about animals is contentious. Yet the evidence of common experience suggests that this is the case, even if we might not admit it, or might feel faintly uncomfortable if we do.

Who hasn’t shouted at a failing machine? The first vehicle I owned was a decrepit van that struggled even on modest inclines. More than once when driving the wreck I found myself putting an arm out through the window and using the flat of my hand to beat the door panel—like a rider on a horse’s flank. “Come on, come on,” I shouted at the dashboard. Only later did I contemplate the absurdity of this action.

Some such behavior is simply the relief of pent-up tension or anger—but not all. Think back to the mid-1990s and the advent of small egg-shaped electronic devices with a screen and a few buttons. They were called Tamagotchis. Bandai, the original Japanese manufacturer, described a Tamagotchi as “an interactive virtual pet that will evolve differently depending on how well you take care of it. Play games with it, feed it food and cure it when it is sick and it will develop into a good companion.” Conversely, if you neglected your Tamagotchi, it died. For a time, millions of children and even adults became willing slaves to the demands of these computerized keychain taskmasters.

Also from Japan is PARO. Modeled on a baby harp seal and weighing a couple of kilos, it’s slightly larger than a human infant. PARO made its debut more than a decade ago, and although the majority of the 4,000 sold remain in Japan, PAROs can now be found in more than 30 other countries.

Covered in soft white fur, PARO responds to touch, light, temperature and speech sounds—as I discover when I try stroking and even talking to the creature sitting on the table in front of me. It turns its head to me when I speak; it emits seal-like squeaks when I stroke it; and when ‘content’, it slowly lowers its head and closes its big appealing eyes, each kitted out with seductively long thick lashes. This blatant emotional manipulation is accentuated when I pick PARO up; cradled in my arms, it begins to wriggle as I go through my talking and stroking routine.

I encounter PARO at the London offices of the Japan Foundation, where it has accompanied its inventor, Takanori Shibata, an engineer at the Japanese National Institute of Advanced Industrial Science and Technology. Shibata categorizes PARO’s benefits under three headings: psychological (it relieves depression, anxiety and loneliness), physiological (it reduces stress and helps to motivate people undergoing rehabilitation) and social. In this last category, he says, “PARO encourages communication between people, and helps them to [interact with] others” (social mediation, to use the technical term). As Shibata points out, “PARO has many of the same effects as animal therapy. But some hospitals do not allow animals because of a lack of facilities or the difficulties of managing pets.” Not to mention worries over hygiene and disease.

Much of the evidence of the benefit from PARO is based on informal observation (though there have also been more controlled trials). In one pilot study, three New Zealand researchers investigated a small group of residents in a care home for the elderly. Each resident spent a short period handling, stroking and talking to a PARO. This activity triggered a fall in blood pressure comparable to that following similar behavior with a living pet.

In my brief period handling PARO, I can’t say I felt anything more than mild amusement—and certainly not companionship. Dogs and cats can do their own thing; they can ignore you, bite you or leave the room. Simply by staying with you they’re saying something. PARO’s continuing presence says nothing.

But then I’m not frail, isolated, lonely or living in a care home. If I were, my response might be different, especially if I were becoming demented, one of the conditions for which PARO therapy has generated particular interest. Shibata reports that his robots can reduce anxiety and aggression in people with dementia, improve their sleep and limit their need for medication. The robots also lessen the patients’ hazardous tendency to go wandering and boost their capacity to communicate.

This value as a social mediator interests Amanda Sharkey and colleagues at the University of Sheffield. “With dementia in particular it can become difficult to have a conversation, and PARO can be useful for that,” she says. “There is some experimental evidence, but it’s not as strong as it might be.” She and her colleagues are setting up more rigorous experiments. But the calculated use of a PARO for companionship she actually finds worrying. “You might begin to imagine that your old person is taken care of because they’ve got a robot companion. It could be misused in a care home by thinking, ‘Oh well, don’t bother to talk to her, she’s got the PARO, that’ll keep her occupied.’” I raise this with Shibata. He insists it isn’t a risk but, despite my pressing the point, is unable to say why it couldn’t happen.

Reid Simmons of the Robotics Institute at Carnegie Mellon University tells me that it doesn’t make sense to pretend you can create a robot that serves our physical needs without evoking some sense of companionship. “They’re inextricably linked. Any robot that is going to be able to provide physical help for people is going to have to interact with them on a social level.” Belpaeme agrees. “Our brains are hard-wired to be social. We’re aware of anything that is animate, that moves, that has agency or that looks lifelike. We can’t stop doing it, even if it’s clearly a piece of technology.”

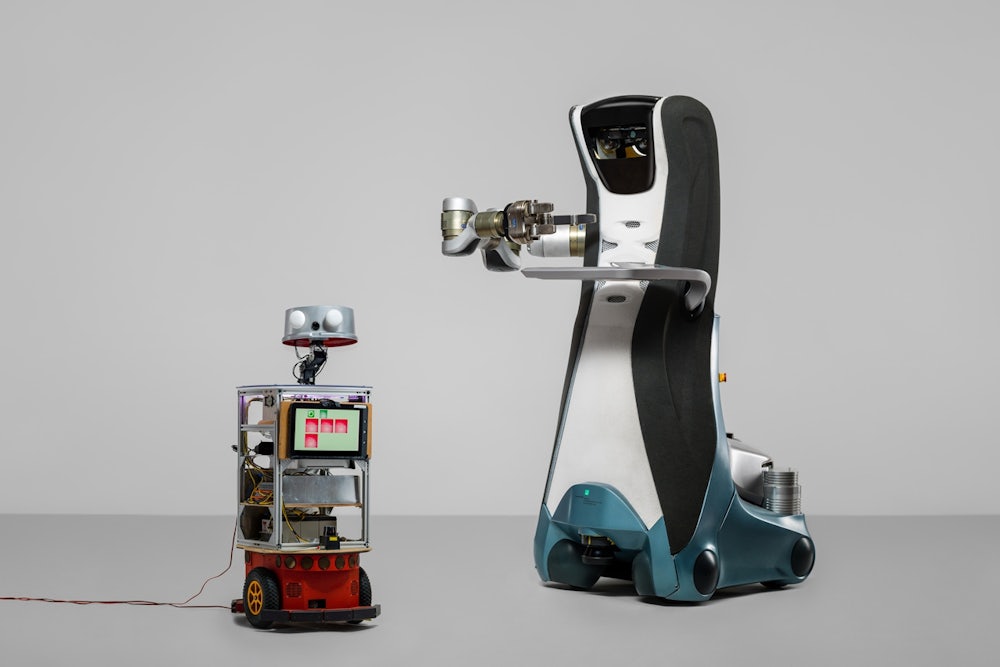

Hatfield, Hertfordshire. An apparently normal house in a residential part of town. Once through the front door I’m confronted by a chunky greeter, standing at just below my shoulder height. Its black-and-white color scheme is faintly penguin-like, but overall it reminds me of an eccentrically designed petrol pump. It’s called a Care-O-bot. It doesn’t speak, but welcomes me with a message displayed on a touch screen projecting forward of its belly region.

Care-O-bot asks me to accompany it to the kitchen to choose a drink, then invites me to take a seat in the living room, following along with a bottle of water carried on its touch screen, now flipped over to serve as a tray. My mechanical servant glides silently forwards on invisible wheels, pausing to perform a slow and oddly graceful pirouette as it confirms the location of other people or moveable objects within its domain. Parking itself beside my table, Care-O-bot unfurls its single arm to grasp the water bottle and place it in front of me. Well, almost—it actually puts it down at the far end of the table, beyond my reach. Five minutes in Care-O-bot’s company and already I’m thinking of complaining about the service.

The building I’m in—they call it the robot house—is owned by the University of Hertfordshire. It was bought a few years ago because a university campus laboratory is not an ideal setting in which to assess how experimental subjects might find life with a robot in an everyday domestic environment. A three-bedroom house set among others in ordinary use provides a more realistic context.

The ordinariness of the house is, of course, an illusion. Sensors and cameras throughout it track people’s positions and movements and relay them to the robots, and it’s this, rather than my box-shaped companion, that I find more perturbing. Also monitored are the activity of kitchen and all other domestic appliances, whether doors and cupboards are open or closed, whether taps are running—everything, in short, that features in our activities of daily living.

Joe Saunders, a research fellow in the university’s Adaptive Systems Research Group, likens Care-O-bot to a butler. Decidedly unbutlerish is the powerful articulated arm that it kept tucked discreetly behind its back until it needed to serve my water. An arm “powerful enough to rip plaster off the walls,” says Saunders cheerfully. “This robot’s a research version,” he adds. “We’d expect the real versions to be much smaller.” But even this brute, carefully tamed, has proved acceptable to some 200 elderly people who’ve interacted with it during trials in France and Germany as well as at Hatfield.

As Tony Belpaeme pointed out to me, the robots we have right now don’t have the skills that are most needed: the ability to tidy houses, help people get dressed and the like. These things, simple for us, are tough for machines. Newer Care-O-bot models can at least respond to spoken commands and speak themselves. That’s a relief because, to be honest, it’s Care-O-bot’s silence I find most disconcerting. I don’t want idle chatter, but a simple declaration of what it’s doing or about to do would be reassuring.

I soon realize that until the novelty of this experience wears off, it’s hard for me to judge what it might feel like to share my living space with a mobile but inanimate being. Would I find an advanced version of Care-O-bot—one that really could fetch breakfast, do the washing up and make the beds—difficult to live with? I don’t think so. But what of more intimate tasks—if, for example, I became incontinent? Would I cope with Care-O-bot wiping me? If I had confidence in it, yes, I think so. It would be less embarrassing than having the same service performed by another human.

After much reflection, I think adjusting to the physical presence of a robot is the easy bit. It’s the feelings we develop about them that are more problematic. Kerstin Dautenhahn, of the Hatfield robot house, is Professor of Artificial Intelligence in the School of Computer Science at the University of Hertfordshire. “We are interested in helping people who are still living in their own homes to stay there independently for as long as possible,” she says. Her robots are not built to be companions, but she recognizes that they will, to a degree, become companions to the people they serve.

“If a robot has been programmed to recognize human facial expressions and it sees you are sad, it can approach you, and if it has an arm it might try to comfort you and ask why you’re sad.” But, she says, it’s a simulation of compassion, not the real thing. I point out that many humans readily accept affection, if not compassion, from their pets. She counters that a dog’s responses have not been programmed. True. But future advances in artificial intelligence could blur the distinction, particularly if a robot had been programmed to program itself by choosing at random from a wide array of possible goals, purposes and character traits. Such an approach might lead to machines with distinct and individual personalities.

“Behaving socially towards reactive or interactive systems is within us, it’s part of our evolutionary history,” she tells me. She’s content to see her robots providing supplementary companionship, but she is aware that care providers with tightly stretched budgets may have little incentive to become overconcerned if a robot does seem to be substituting for human contact.

As I leave the robot house this worries me too. But it also puzzles me. If dogs, cats, robot seals and egg-shaped keyrings can so easily evoke feelings of companionship, why should I be exercised about it?

Charlie, the robot I played the sorting game with, is designed to entertain children while helping them learn about their own illnesses (Charlie is also used in a therapy for children with autism). When children are introduced to Charlie, they’re told that it too has to learn about their illness, so they’ll do it together. They’re told the robot knows a bit about diabetes, but makes mistakes. “This is comforting for children,” says Belpaeme. “If Charlie makes a mistake they can correct it. The glee with which they do this works well.” Children bond with the robot. “Some bring little presents, like drawings they’ve made for it. Hospital visits that had been daunting or unpleasant can become something to look forward to.” The children begin to enjoy their learning, and take in more than they would from the medical staff. “In our study the robot was not a second-best alternative, but a better one.”

Charlie is a cartoon likeness of a human. A view widely held by researchers, and much of the public, is that robots should look either convincingly human or obviously not human. The more a machine looks like us the more we’ll relate to it—though only up to a point. A very close but imperfect similarity tends to be unsettling or even downright disturbing. Robotics professionals refer to what they call the ‘uncanny valley’; in short, if you can’t achieve total perfection in a robot’s human-like appearance, back off. Leave it looking robot-like. This is rather convenient—a version of Charlie indistinguishable from you and me could price itself out of the market. That doesn’t mean it shouldn’t simulate our actions, however. A robot that doesn’t move its hands, for example, looks unnatural. “If you look at people when they’re talking, they don’t stay still,” says Belpaeme, pointing at Charlie and a child engrossed in conversation. “Besides their lips and tongues, their hands are moving.”

The angst we generate over adults forming relationships with robots seems not to be applied to children. Consider the role of dolls, imaginary friends and such like in normal childhood development. To start worrying about kids enjoying friendships with robots seems, to me, perverse. Why then am I so anxious about it in adult life?

“I don’t see why having a relationship with a robot would be impossible,” says Belpaeme. “There’s nothing I can see to preclude that from happening.” The machine would need to be well-informed about the details of your life, interests and activities, and it would have to show an explicit interest in you as against other people. Current robots are nowhere near this, he says, but he can envisage a time when they might be.

Dautenhahn hopes that robots never become a substitute for humans. “I am completely against it,” she says, but concedes that if that’s the way technology progresses, there will be little that she or her successors can do about it. “We are not the people who will produce or market these systems.” Belpaeme’s ethical sticking point—and others usually say something similar—would be the stage at which robot contact becomes preferred to human contact. But in truth, that’s not a very high bar. Many children already trade many hours of playing with their peers for an equivalent number online with their computers.

In the end, of course, the question becomes not ‘Do I want a robot companion to care for me?’ but ‘Would I accept being cared for by a robot?’ If the time comes when I am still compos mentis but physically infirm, would I be prepared for the one-armed Care-O-bot to take me to the toilet, or PARO to be my couch companion during movies?

There are cultural considerations here. The Japanese, for example, treat robots matter-of-factly and appear more at ease with them. There are two theories about this, according to Belpaeme. One attributes it to the Shinto religion, and the belief that inanimate objects have a spirit. He himself favors a more mundane explanation: popular culture. There are lots of films and TV series in Japan that feature benevolent robots that come to your rescue. When we in the West see robots on television they are more likely to be malevolent. Either way, though, I’m not Japanese.

On a simple level of practicality there’s a way to go before Mr Care-O-bot or any of its kind have the communication skills, dexterity and versatility of even the most cack-handed human carer. But assuming the engineers overcome this hurdle—and I’ve every reason to believe they will, very soon—I’m back to the question of companionship. Life devoid of it is sterile. So the fact that we tend naturally to form bonds, even with robots, I find, in principle, encouraging.

But companionship, to my mind, incorporates three key ingredients: physical presence, intellectual engagement and emotional attachment. The first of these is not an issue. There’s my Care-O-bot, ambling about the house, responsive to my call, ready to do my bidding. A bit of company for me. Nice.

The second ingredient has yet to be cracked. Intellectual companionship requires more than conversations about the time of day, the weather, or whether I want to drink orange juice or water. Artificial intelligence is moving rapidly: in 2014 a chatbot masquerading as a 13-year-old boy was claimed to be the first to pass the Turing test, the famous challenge—devised by Alan Turing—in which a machine must fool humans into thinking that it, too, is human.

That said, the bar is fooling just 30 per cent of the judging panel—Eugene, as the chatbot was called, convinced 33 per cent, and even that is still disputed. The biggest hurdle to a satisfying conversation with a machine is its lack of a point of view. This requires more than a capacity to formulate smart answers to tricksy questions, or to randomly generate the opinions with which even the most fact-laden of human conversations are shot through. A point of view is something subtle and consistent that becomes apparent not in a few hours, but during many exchanges on many unrelated topics over a long period.

Which brings me to the third and most fraught ingredient: emotional attachment. I don’t question this on feasibility counts because actually I think it will happen anyway. In the film Her, a man falls in love with the operating system of his computer. Samantha, as he calls her, is not even embodied as a robot; her physical presence is no more than a computer interface. Yet their affair achieves a surprising degree of plausibility.

In the real world there is—so far—no attested case of the formation of any such relationship. But some psychologists are, inadvertently, doing the groundwork through their attempts to develop computerized psychotherapy. These date back to the mid-1960s when the late Joseph Weizenbaum, a computer scientist at the Massachusetts Institute of Technology, devised a program called ELIZA to hold psychotherapeutic conversations of a kind. Others have since followed his lead. Their relevance in this context is less their success (or lack of it) than the phenomenon of transference: the tendency of clients to fall in love with their therapists. If the therapist just happens to be a robot… well, so what?

The quality and the meaning of such attachments are the key issues. The relationships I value in life—with my wife, my friends, my editor—are emergent products of interacting with other people, other living systems comprising, principally, carbon-based molecules such as proteins and nucleic acids. As an ardent materialist I am not aware of evidence to support the vitalist view that living things incorporate some ingredient which prevents them being explained in purely physical and chemical terms. So if silicon, metal and complex circuitry were to generate an emotional repertoire equal to that of humans, why should I make distinctions?

To put it baldly, I’m saying that in my closing years I would willingly accept care by a machine, provided I could relate to it, empathize with it and believe that it had my best interests at heart. But that’s the reasoning part of my brain at work. Another bit of it is screaming: What’s the matter with you? What kind of alienated misfit could even contemplate the prospect?

So, I’m uncomfortable with the outcome of my investigation. Though I am persuaded by the rational argument for why machine care should be acceptable to me, I just find the prospect distasteful—for reasons I cannot, rationally, account for. But that’s humanity in a nutshell: irrational. And who will care for the irrational human when they’re old? Care-O-bot, for one; it probably doesn’t discriminate.