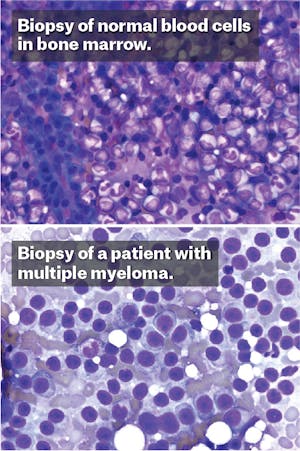

One early spring morning in 2009, Helen set off from the starting line of Boston’s Run to Remember, an annual half-marathon. She jogged alongside the Charles River, through the public gardens, across the bridge into the city of Cambridge. The weather was cool and serene, the oaks had burst into an early green, and she felt her body working in synchrony. But soon after she got home, her runner’s high was overcome by a dull ache that flared deep in her legs. At first, she was sure the pain came from shin splints. But when it refused to abate, she consulted her doctor, who saw that her blood counts had plummeted. After she visited a hematologist, doctors put a needle through her skin and pierced the hard shell of her hip to collect the soft marrow underneath. A few days later, the results came back. “I had multiple myeloma,” she whispered to me, as though still in shock, when we met this past fall.

As a doctor—though not her doctor—I could understand why she might still be shocked. Multiple myeloma is an uncommon cancer that usually afflicts elderly Americans, but Helen* was a cruel statistical exception: She was only 38 at the time of diagnosis, giving her a life expectancy of only about six years. Facing that grim prognosis, Helen began to wonder if this was her fault—if she had done something wrong. Still a young woman, married, employed, with two children at home, she exercised regularly, never smoked, rarely drank, and hardly ever got a cold. What in my life, she wondered, was to blame?

Helen’s doctors encouraged her to look forward. Her hematologist, at Massachusetts General Hospital in Boston, explained that her bone marrow had become overgrown by cancerous immune cells, and that the treatment required high doses of chemotherapy. The doctor described the litany of consequences from the powerful medications: hair loss, infections, and a probable bone marrow transplantation. Helen sat in dismay in the clinic of the busy Boston hospital, surrounded by expert oncologists with their novel clinical trials. She faced either the threat of a serious, potentially life-limiting disease or a heavy-duty regimen of drugs that she felt would similarly poison her body. “There had to be another way,” she said. “I didn’t want chemotherapy.”

Helen did choose another way, based on her suspicion about why she had fallen sick in the first place. “It had to be my diet,” she told me. So, against all professional medical advice, she started an experiment on herself.

Nutrition has a role in today’s medicine, but it’s nothing compared to pharmaceutical therapy’s. Doctors favor drugs over everything else. The average older adult in this country is on four medications a day, which helps explain why, in the past few years, companies like Pfizer, Eli Lilly, and GlaxoSmithKline have enjoyed profit margins of 20 percent or more. But the never-ending rise of drug costs is shaking our already buckling healthcare system. A year of cancer drug therapy, for example, costs more than $100,000 per patient. Yet the case for prescribing drugs remains strong, mostly because they work: HIV has become a chronic illness rather than a death sentence in the U.S., some cancers shrink away in response to targeted therapies, and, most recently, the Ebola outbreak led to the swift development of vaccines and therapies that may curb future epidemics.

Despite the economic success and reasonable efficacy of the pharmaceutical industry, some patients disenchanted by the costs and side effects of drugs have been flocking to other solutions. Nutritional therapies have become an attractive self-treatment. Like Helen, more than 75 percent of patients diagnosed with cancer will try dietary and other alternative therapies and many do not disclose them to their doctors, relying instead on the advice of friends or the media. Similarly, those with chronic diseases like multiple sclerosis and inflammatory bowel disease go on dietary regimens without informing their physicians. But most doctors never ask patients what they are eating, and focus instead on finding the right prescription medication—or often a cocktail of medications.

There’s a nutrition gap in America, a disconnect between how patients and clinicians perceive diet. That space has been largely filled by opportunists like Gwyneth Paltrow and Dr. Mehmet Oz, obscuring many meaningful advances in understanding how nutrition influences health.

When I asked Helen about this disconnect, she felt most doctors “don’t get it.” Every physician she saw recommended starting chemotherapy quickly, especially given that she was so young and the cancer so aggressive. “I was frightened,” Helen said. “I knew I had a serious disease, but I felt like most doctors I spoke to weren’t open to other options for treatment.” Partly because of that, Helen began to be convinced that diet had caused her disease, and started a blog on the subject. Within a month, in August of 2009, she had made her decision. She declined chemotherapy and boarded a plane, headed for a clinic just across the border in Baja, Mexico, to begin nutritional therapy for her cancer. “I was going on the Gerson diet,” she said.

I had stumbled across Helen’s blog last year. As a physician, I was unnerved by her approach and agreed with her doctors: Multiple myeloma requires an aggressive, chemotherapeutic strategy, especially in someone so young. Skipping chemo in favor of a dietary cure would almost certainly reduce her chances of survival—not unlike doing nothing at all to address the disease. Yet throughout medical history, renegades like Helen have given us plenty of insights about how diseases work, and how they might be better treated. Could Helen be onto something? Might the Western diet be responsible for more than the obesity epidemic? Could food be both the cause and the cure of some of our modern diseases?

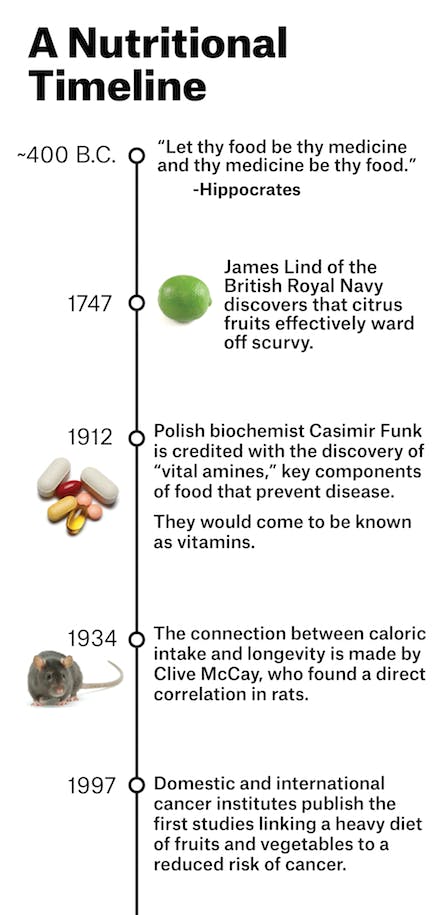

“She’s crazy,” an oncology colleague of mine said when I told him about Helen’s decision. But what we think of as crazy in medicine ebbs and flows; past mistakes resurge into cures, modern miracles become fads, the therapy in vogue fades away. And nutrition, for centuries, has been embedded in the core of medical practice. Hippocrates (circa 400 BC), the very physician for whom the oath we take in medical school to “do no harm” is named, believed that “all disease begins in the gut,” and frequently prescribed nutritional regimens to treat the ill. Throughout the nineteenth and early twentieth centuries, patients afflicted with life-threatening diseases went on retreats to sanatoriums stocked with farm-raised fruits, vegetables, and fresh cow’s milk.

Just before the dawn of the pharmaceutical industry in the first half of the twentieth century, doctors realized that food contained specific properties that could heal the sick. After sailors began dying on long, oceanic voyages, it was discovered that their disease (scurvy) could be prevented with fruits and vegetables; orphans and prisoners with a vicious whole-body rash and a progressive dementia (pellagra) could be rescued by supplementing their diets with niacin; an epidemic of paralysis and madness among the affluent who had begun eating polished-white rice (beriberi) could be reversed by adding thiamine back into the grain.

Encouraged by the discovery of these compounds found in certain foods, subsequently named vitamins, a flurry of studies began on dietary cures. In the early 1920s, Dr. Sidney Haas, an American pediatrician, saw children coming into his clinic emaciated and bony, even though their parents fed them three times a day. Mystified, Haas trialed them on a “banana diet” of mostly fruit. He instructed the parents to keep children away from the refined sugars and carbohydrates that had become popular in the United States. Almost immediately, the sick children bulked up and their symptoms of malnutrition disappeared. The children had celiac disease; Haas had stumbled on the first iteration of the gluten-free diet as therapy.

As the pharmaceutical industry entered its golden era, the medical establishment grew interested in empirical data, randomized controlled trials, and regulation. As alliances between pharmaceuticals and physicians grew stronger, noted medical societies were quick to filter out doctors who practiced non-pharmacologically based medicine that increasingly appeared to be on the fringe.

Max Gerson, a German Jewish physician who fled Bielefeld, Germany, to New York City in the 1930s, had begun practicing a dietary program that raised eyebrows, even though his work had only built on nutritional practices started by the likes of Haas and his colleagues. “Salt should be entirely eliminated. Vegetables should be stewed in their own juices. Tobacco, sharp spices, tea, coffee, cocoa, chocolate, alcohol, refined sugar, refined flour, ice cream, cake, preserves, oils,” are forbidden, he wrote.

While in Germany, Gerson had created a regimen devoid of processed foods and had published several cases of patients forever cured of migraines, arthritis, and even forms of tuberculosis. He surprised himself most when, in 1928, a patient with cancer of the bile ducts asked for his diet. Initially, he refused, but caved to her persistence. Six months later, he announced her cure. Two more cancer cures followed.

But Gerson ran into resistance when he began applying his dietary regimen at Gotham Hospital in New York City in the 1940s. Suspicious of his methods, the Journal of the American Medical Association asked for copies of Gerson’s data. When he failed to provide the reputable medical journal with the requested information, the editors responded. In a widely read 1946 JAMA article, editor Dr. Morris Fishbein (who actually made much of his reputation busting medical flimflammery) denounced the Gerson diet as lacking significant evidence in the treatment of cancer. In a follow-up editorial in 1949, Fishbein published a criticism of Gerson’s approach in a review entitled “Frauds and Fables,” labeling Gerson a charlatan.

Subsequently, both the American Medical Association and the American Cancer Society blacklisted the Gerson regimen. Insurance companies stopped providing compensation for the therapy and most physicians denied any association with Gerson’s methods. His colleagues were often derisive of his unorthodox methods. “The knife of the AMA was at my throat,” Gerson said in a lecture in California in 1956. Hoping to salvage his credibility, Gerson sent the medical records of 25 successful cases to the National Cancer Institute in Bethesda, Maryland. “Twenty-five cases is really not enough for the NCI to make a positive and definitive statement about the efficacy of a therapy,” they wrote back. “We will need a hundred more cases.” Gerson couldn’t gather that many. The New York Medical Society expelled him. Once in exile from Europe during World War II, he found himself again in exile, but this time, from the medical establishment in the United States.

While the Gerson regimen disappeared from most American hospitals, it has survived in the shadows of allopathy. At the end of the summer in 2009, Helen found herself crossing the U.S. border into a beach town in Mexico. “You could see the fence separating the two countries from the hospital window,” Helen’s husband remembers. Although Max Gerson had passed away in 1959, his daughter founded a place to administer his therapies at a hospital near Tijuana, free from the persecution of American medical societies. For three weeks, Helen lived at the facility in Mexico. “I started drinking vegetable juice every hour, for twelve hours a day.”

She found things initially flavorful, a panoply of carrots, green apples, then Swiss chard, green peppers, romaine lettuce, red cabbage. She took five coffee enemas per day. After three weeks of training, Helen returned home. For the next two years, she followed the Gerson regimen exclusively, juicing daily, avoiding forbidden foods, and taking daily enemas. “I was actually pretty exhausted that whole time,” she reported to me.

Most doctors today haven’t heard of Gerson. The American Cancer Society still does not believe there is evidence supporting his diet and deems it dangerous. Between 1979 and 1981, at least ten patients on the Gerson diet were admitted to hospitals in Southern California with blood infections caused by the bacterium Campylobacter fetus, believed to be a side effect of the liver injections that accompany the older versions of the therapy. None of the admitted patients was cured of cancer. Five of the patients were in comas from dangerously low levels of sodium, presumably from the salt-free part of the diet. In 1992, JAMA published a “special communication” again denouncing the therapy. “The poisons in processed foods that proponents of the Gerson therapy say cause cancer have never been identified,” they wrote, disputing the idea that a diet of fruits and vegetables could prevent the process of cancer development. “This makes the Gerson therapy impossible to accept,” they wrote.

The Gerson diet is not the only one that has been largely rejected by the medical establishment. Many doctors consider nutritional therapies to be fringe medicine. “Part of the reason is that the field has been hijacked by people who are too far out in left field,” says Carolyn Katzin, herself a nutritionist affiliated with the UCLA Cancer Center. Dr. Oz, the cardiothoracic surgeon who hosts a popular daily TV show, for example, testified last year at a hearing held by the Senate Subcommittee on Consumer Protection, which has taken issue with his health claims about certain foods, such as green coffee beans, made without the scientific literature to back up his endorsements. Those claims recently led physicians to draft a letter calling for him to be fired from his faculty position at Columbia University.

Similarly, Dr. Andrew Weil, who created the popular “anti-inflammatory diet,” has been gently ignored by most of the medical community. Arnold Relman, former editor-in-chief of the New England Journal of Medicine, rebuked him in The New Republic for largely ignoring the scientific method in making his recommendations and for using a collection of patients’ anecdotes and testimonials in its place. “It’s a shame,” Katzin says. “Because the study of nutrition has been tainted by this kind of stigma, when in fact there’s probably a lot of importance in the relationship between diet and health. This divide between medicine and nutrition has held us back.” Nutrition, she says, is something today’s conventional doctors need to reclaim.

Dr. Eliot Berson has spent his career studying the retina, the photosensitive film delicately tacked onto the back of the eye. He’s been chasing after a devastating disease called retinitis pigmentosa (RP), an illness that has stumped the pharmaceutical industry for decades and puzzled doctors for more than a century. “Usually,” Berson tells me over coffee, “it starts slowly in young people, in adolescents. A boy or a girl goes into a movie theater and the whole place goes pitch black. Or, they hate getting their picture taken—they say it’s like getting hit by a flash of light,” he says, putting his hands up in front of his eyes.

Berson, 77, is an ophthalmologist—the last person you might expect to think deeply about diet. He has worked at the Massachusetts General Hospital studying RP, a progressive, incurable disease that robs the patient of cells in the eye that process black-and-white vision. “It’s heartbreaking,” he says. “I have had to help people far younger than me to find their way out of my office. Sometimes, I’ve had people ask me if this might be the last day they will ever see light.”

This kind of fear and uncertainty has led many of these people to search for new remedies beyond the scope of what doctors and conventional medicine can offer. “Part of the genesis of my work,” Berson says, “comes from these self-treaters: patients who changed their diets, who tried supplements, who came back and told me what helped their illness and what didn’t.”

Deciding to study these self-treaters more formally, Berson and his colleagues administered food-frequency questionnaires that asked patients specific questions about their dietary intake. To his surprise, he found that people who ate the highest amounts of vitamin A (through food and supplements) had the lowest rates of visual loss, a result unachievable through any available drug.

Wondering if diet might slow the loss of the visual field, Berson’s group performed several clinical trials over the past two decades and found that those who took high doses of vitamin A supplements (about 4 cents per pill) and ate a diet rich in omega-3 fatty acids (specifically one to two 3-ounce servings of oily fish like salmon, tuna, herring, mackerel, or sardines per week) had a 40 to 50 percent slower loss of visual field sensitivity.

“We went around presenting our work at national conferences. When I told the audience that we had been inspired by what our patients had taught us, half the audience laughed and half the audience didn’t,” Berson says. “For many doctors, the answer that diet and supplements might slow this disease was too simple.”

“We doctors are too stubborn and too slow to accept that kind of simplicity,” he continues. “One doctor said he didn’t believe our results until he saw it work in a mouse. So we did that. We tested our hypothesis in a mouse model and got that published. I have great respect for conventional approaches and for science. But my god, if the answer is staring you in the face—that we can help people with minimal risk through cheap, straightforward interventions, I think we have a moral obligation to learn more about it.”

For physicians, our ignorance about food and medicine begins early. Most medical schools only dedicate a few weeks in a crammed schedule to studying nutrition. “We used to have a nice little course on prevention and diet,” says Dr. Walter Willett, a pioneer of nutritional research and a professor of medicine at Harvard Medical School. “Half the students who used to take that course changed their diet afterwards, and the majority felt comfortable talking to patients about their dietary habits. But that course has been lost in the midst of curriculum reform,” he says.

Doctors, largely uneducated in nutrition, leave it to patients to investigate how diet and illness interact. Patients start experiments to treat themselves. Entire networks of patients have developed online communities sharing and exchanging details of how food is affecting their Crohn’s disease, or depression, or lupus.

Some self-treating patients have gotten their message out this way. Terry Wahls, a physician with progressive multiple sclerosis (MS), documented her dietary redesign in a TED Talk on how eating a nutritionally dense diet allowed her to escape her wheelchair; she now bikes five miles to work each day. The TED video has been viewed more than two million times, and the Wahls Protocol, as she calls it, has been adopted by countless MS patients.

But this patient-driven faith in diet, however earnestly pursued, relies upon anecdote. Science, not anecdote, is ultimately the language of contemporary doctors, and for the medical community to take nutrition as seriously as patients do, doctors need data. That usually means a randomized controlled trial (RCT), in which one group of patients is treated with an experimental therapy while another group receives a placebo. But RCTs are particularly tricky to execute when testing a nutritional intervention: Patients trialing a diet must essentially adopt a new lifestyle three or more times a day and stick to it for years before an outcome might be observed.

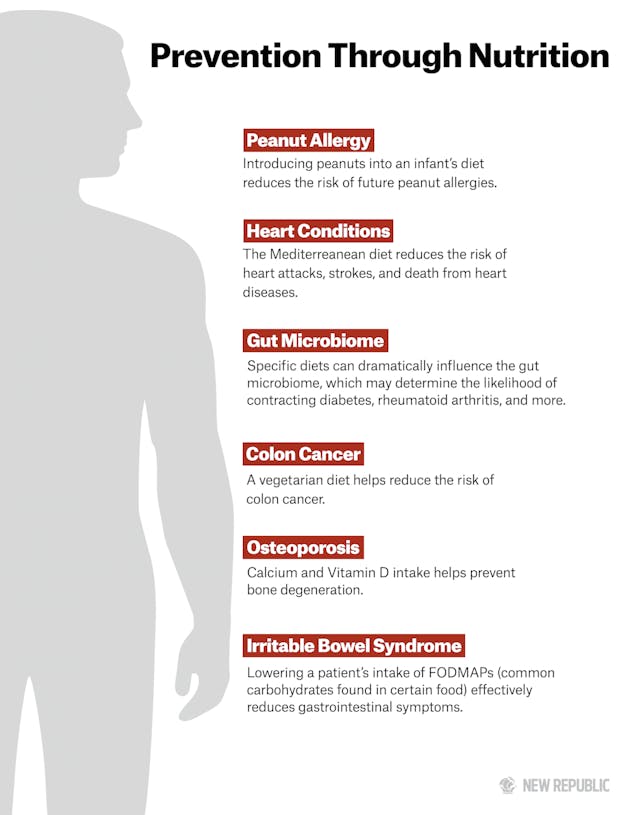

Not that there haven’t been large randomized controlled trials in nutrition: In 2013, a study in The New England Journal of Medicine made waves after investigators found that switching patients to a Mediterranean diet rich in olive oil, nuts, beans, and fish reduced the risk of heart attacks, strokes, and death from heart disease by 30 percent.

Yet even with positive nutritional data, pharmaceuticals continue to trump results from even powerful dietary studies. It’s far too easy for both doctors and patients to reach for medications, while going through a dietary redesign seems disruptive and unappealing. Making the case for nutritional therapy over pharmaceuticals isn’t easy—it’s a struggle that Gary Wu, the associate chief of research in the Division of Gastroenterology at the University of Pennsylvania, thinks about constantly.

Wu treats inflammatory bowel disease, a relentless abdominal disorder affecting more than one million Americans. Many patients take tumor necrosis factor (TNF) inhibitors, a category of drugs that has led to dramatic improvements in the natural course of the disease, at a cost of $15,000 to $25,000 per patient per year. But many patients are required to stay on these drugs for life, through periodic infusions or self-injections, and some still fail to improve or end up requiring bowel surgery. So Wu’s group is currently studying elemental diets, regimens composed of food components in their simplest form, and has found that the therapy works almost as well as TNF inhibitors. “We’ve been focused for so long on pharmacologic agents that nutrition has never really been in the mainstream. But other countries, Japan, Israel, Canada, some parts of the United States, have been trying to use diet to treat inflammatory bowel disease. There’s a growing momentum that nutritional interventions do work.”

Yet moving a dietary intervention from anecdote to accepted therapy is nearly impossible. While Wu and Berson have been successful at this, our current environment is primed to develop conventional pharmaceuticals rather than to advance nutritional therapies. If a researcher wants to test the efficacy of a drug in humans, the Food and Drug Administration requires the scientist to submit an Investigational New Drug Application (IND), which can cost upwards of a million dollars, a fee that is steep but affordable for pharmaceutical companies. But recent guidance from the FDA similarly requires an IND for foods that are to be studied in humans for the management of illness, a significant hurdle for academics and researchers who cannot invest in such a potentially expensive gamble. Consequently, nutritional therapies have a hard time reaching the stage of serious clinical trials. They never receive the FDA’s blessings and stagnate in the dark corner of the local vitamin shop, lost to the mainstream.

Meanwhile, despite such hurdles to nutritional study, evidence continues to accumulate that the components of everyday foods have dramatic effects on our health. Take turmeric, a rooted cousin of ginger. The plant’s active ingredient, curcumin, potently reduces oxidative stress and inflammation, and in early clinical studies has been found to have benefits in liver disease, breast cancer, anxiety, and inflammatory disorders. Fiber, a main component of vegetarian diets, is processed by bacteria into butyrate, a simple molecule that can dampen inflammation in the gut; fiber and butyrate are currently being tested in numerous disorders ranging from Type 1 diabetes to ulcerative colitis.

And, in an emerging field called epigenetics, our genes, once thought to be stalwart blueprints that fate us to certain illnesses, have been repeatedly shown to be rewired through the action of diets rich in folate, selenium, and polyphenols from foods such as green tea, apples, and black raspberries. Whole diets, too, can have dramatic effects. Aside from the cardioprotective effects of the Mediterranean diet, just last month, a study in JAMA revealed that a vegetarian diet cut colon cancer risk by more than 20 percent.

On the flip side, food can contain agents that cause disease. Recently, a study in the New England Journal of Medicine revealed that the compound phosphatidylcholine, found in red meat, is converted in the gut into trimethylamine-N-oxide, a strong risk factor for the development of fatty plaques in the heart vessels, ultimately leading to heart attacks.

These are just a few named molecules, the tip of an untapped iceberg. Whether we are eating turmeric, fiber, or red meat, it’s clear their breakdown products have precise and potent effects on essential systems in the body: hopping onto the backs of enzymatic reactions, tweaking the populations of bacteria that grow in our intestines, tuning our immune systems up or down, or changing the way our genes are interpreted.

We used to study food in broad strokes. Fat, cholesterol, and salt are bad; fruits, vegetables, and whole grains are good. Largely, those observations are proving true, but science is showing the truth to be more subtle: Certain dietary patterns, some foods, or specific nutritive components may affect human illness as potently or precisely as the latest blockbuster synthetic. Only by increasing our focus on nutrition might the distinction between drugs and food begin to dissolve—a focus that must come from medical schools, hospitals, and research groups supported by central and private funding agencies, so that patients like Helen aren’t left to self-experimentation.

Last fall, I drove out to visit Helen and her husband in Swampscott, a small suburb northeast of Boston. I didn’t have a photograph of her, but I thought I might pick her out after having seen what myeloma does to most people. When she introduced herself, with her husband beside her, I was stunned. Her eyes were bright, her face gentle and relaxed without any evidence of chronic illness. “You look really great,” I said. And she did.

Yet her appearance, however glowing, belied her five-year struggle—her trip to Baja, to various clinics, hospitals, and rehabilitation centers; her inner conflicts between her own philosophies and those offered by conventional doctors and alternative practitioners; blood-test results that made her question her decision to forgo chemotherapy. Yet nutrition continued to be a centerpiece of her life. A volunteer at her children’s elementary school, she saw what other children were eating and worried about her own kids’ health. At home, her family followed her lead in dietary change. “Now my husband and children eat real food,” she said, citing almond-flour pancakes, oatmeal, honey, whole grain breads, bananas, berries, kale, spinach, red carrots, and grass-fed meats.

She leaned towards me. “I was wary of you when you contacted me. A lot of doctors are scared off by this stuff,” she said. “But I just think this whole nutrition thing isn’t going to work until conventional doctors start to recognize it might have impact. I think there’s a middle road, where we can combine chemotherapy and nutrition. But we aren’t even there yet. Most doctors don’t consider that diet has a place beside drugs.”

Helen was right. I was wary, and still am. Multiple myeloma is a very serious, often life-threatening illness, for which we have developed therapies using powerful drugs that can extend life by years. Survival rates have improved in the past decade, and some oncologists believe that, through the use of novel medications, the disease might be convertible to a chronic illness. That’s progress and another victory for the pharmaceutical industry and conventional medicine. Given those statistics, and Helen’s young age, I would advise Helen to receive chemotherapy—alongside her nutritional change. I strongly believe that diet can have a profound effect on the course of illness and must be considered by physicians during therapy.

Helen continues to follow a valid conviction, and many follow behind her. Americans want a serious discussion and investigation into how food influences, or even dictates, the development of illness. Science is again stepping into this long-running conversation. Leaps in technology and research have made it possible to understand how food influences our epigenetics, our microbiomes, and our metabolomes. And yet, many of the diseases that have pushed our country into a health-care crisis—obesity, diabetes, inflammatory disorders—continue to be treated and monopolized by expensive pharmaceuticals, while patients are increasingly adopting tools to measure the parameters of their own health. Our society is burdened by the cost of drugs and is ready, culturally, to look into the benefits of dietary change as a preventative or even a therapeutic approach. But are we doctors ready to think outside the pillbox? If not, the nutrition gap won’t close anytime soon.

Helen often gets emails from her readers who ask what they should do next, what they should eat, whether particular foods make any kind of difference, if they should go on chemo alongside their diets.

“But I can’t give them medical advice!” she said. “I’m a patient, not a doctor, and I certainly don’t have all the answers.” She took a drink of water and looked at me. “I wish I did.”

*Not her real name. She requested anonymity because of a pending settlement with an insurance company over an unrelated procedure.