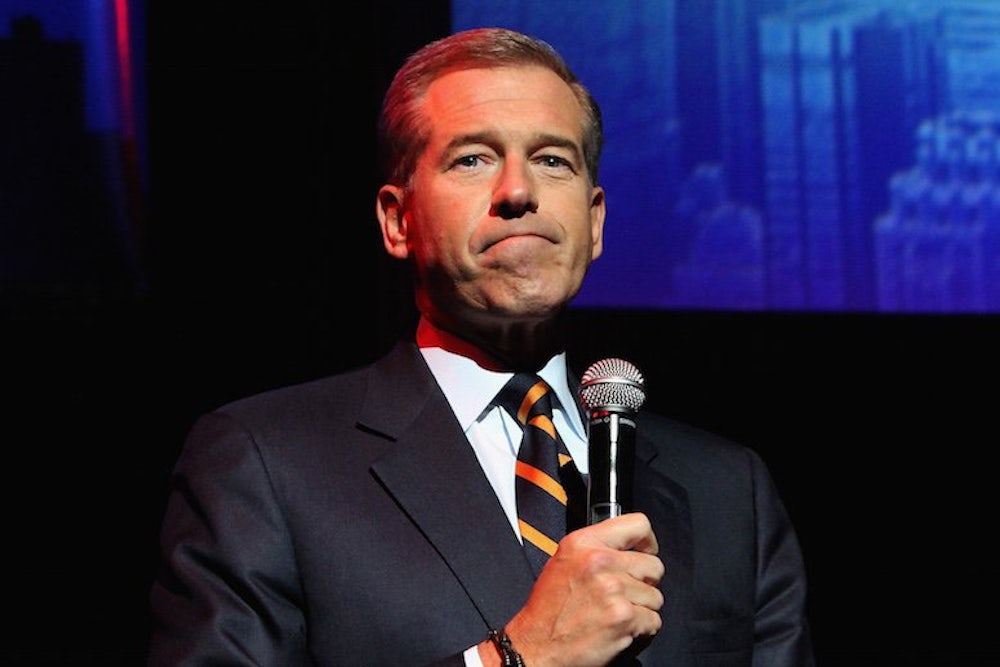

A Politico headline from last week reads, “Why Did Brian Williams Lie?” The New York Post was more poetic: “Lyin’ Brian war scandal engulfs NBC.” And yesterday the gossip site TMZ reported that Williams has been suspended for six months “for lying about being shot at in a helicopter.”

Aren’t we getting a little ahead of ourselves? One reason the Williams story remains fascinating even after the suspension is that we don’t know if he did lie. We know he didn’t tell the truth; he wasn’t, in fact, in a helicopter hit by enemy fire, and it may turn out he wasn’t as close to some of the Hurricane Katrina action as he later recalled. But we don’t know whether he was consciously aware of any untruths he was telling.

This is a nuance many observers don’t have time for—not just Politico, the Post, and TMZ, but lots of people who have derided Williams’s claim that he “conflated” the helicopter he rode in 12 years ago with a different helicopter he saw and reported about that day.

But among people who study the human mind, the “conflation” explanation isn’t at all farfetched. Lots of studies show that a normally operating human brain plays exactly these kinds of tricks on people.

Still, warped memories are only the tip of the iceberg. When you take a broader look at the landscape of human self-deception, and how it plays out in journalism, the Williams case shrinks almost to the point of insignificance. Other kinds of natural cognitive biases foster more consequential cases of journalistic malpractice. In fact, if you could have magically neutralized them in the years after 9/11, there might have been no Iraq war for Williams to misremember.

The psychologist Elizabeth Loftus put false memory on the map decades ago by showing how easy it is to permanently insert new narrative elements when someone is recalling an event. This kind of research has since moved to the neurological level. Kenneth Norman, a psychologist at Princeton, studies how memories get distorted via “interactions between medial temporal structures and [the] prefrontal cortex.”

On a more anecdotal level: Norman likes to tell students about the time he was recounting to a friend something that had happened to him when he realized that it actually hadn’t happened to him. How did he know? Because it had happened to the friend he was talking to, who had once recounted the experience to Norman—and who now helpfully reminded him of this fact. Norman, somewhat like Williams, had conflated his experience with someone else’s.

The parallels go beyond that. The memory Norman had appropriated was of seeing a famous actor in a particular restaurant. It was a restaurant he had actually been in, and the actor was someone he had seen—just not in person. So the key visual elements for the false memory were in place, ready for a little dramatic tweaking.

So too with Williams: He had been in the kind of helicopter that was hit and damaged, he had made an unplanned landing after experiencing a violent jolt (from the release of heavy equipment the helicopter was carrying), and he had then seen the damaged helicopter on the ground—not to mention subsequently seeing images of it juxtaposed with images of himself. So a minor reshuffling of his mind’s archival imagery was all that was necessary.

Most of us have no way of knowing how far our memories depart from reality, since we rarely find ourselves in the situation Norman found himself in—recounting a “memory” to its actual owner. Nor do we do what Williams did—retell the same stories on videotape over many years and then suddenly have that whole database subjected to crowdsourced fact-checking.

Of course, Williams knew these retellings were being videotaped. And in the case of many of the retellings that are now being scrutinized, he knew there were other witnesses to the original story. And, since he’s not stupid, that probably means his fabrications weren’t conscious and intentional, but, rather, were an illustration of human memory working as human memory often works.

Why would human brains be so fallible? The best guess is that, from the point of view of the brain’s creator, natural selection, unreliable memory is a feature, not a bug.

It makes sense when you think about it: In both Williams’s and Norman’s cases, the false memory put them closer to something important—to a famous actor, to a brush with death. So too with the helicopter pilot who on CNN at first vouched for Williams’s story. He said—and apparently believed—that he had been Williams’s pilot, but it turned out this was just his memory’s way of placing him closer to something important: a star anchorman. He later realized he had actually been flying a helicopter near the one Williams was in.

The foundational premise of evolutionary psychology is that the human brain was designed, first and foremost, to get our ancestors’ genes into subsequent generations. During our evolutionary past, high social status could help do that. Believably telling stories that connect you to important people or underscore your daring can elevate your social status. And the best way to believably tell those stories is to believe them yourself. So genes for this kind of self-deception could in theory flourish via natural selection.

If this were the only kind of natural self-deception—all of us retelling our fishing stories until a trout turns into a barracuda—the world would probably be a better place, and journalism would be a more consistently honest enterprise. But unconscious dishonesty runs deeper than that.

Before elaborating, I should say that I know Williams slightly. I haven’t spoken to him, or otherwise communicated with him, in more than three years, and (if memory serves!) I communicated with him on about half a dozen occasions in all the preceding years. But my encounters were always friendly, and I’m sure that makes me look at his plight sympathetically. So judge the above paragraphs in that light.

This source of bias—when a journalist is acquainted with someone who figures in a story—is well known, and the standard remedy is either to disclose the relationship fully or to recuse yourself from writing about the story. Which is fine, but what about when the subject of our journalism is someone we don't know but still have strong feelings about?

Suppose, for example, that you hated Saddam Hussein. I doubt it’s a coincidence that the journalist who most doggedly and credulously circulated accounts of Hussein’s aiding and abetting Al Qaeda had already advocated war partly on the grounds of how horrible Hussein was.

But I also doubt that, in peddling this story, he was being consciously dishonest. He was just subject—as all of us are—to “confirmation bias.” We’re especially attentive to evidence that supports our predispositions—including the predisposition to believe our enemies are up to no good or that our friends are up to no bad.

In fact, it’s even subtler than that. When our friends do good things, or our enemies do bad things, we tend to attribute these deeds to “dispositional” factors. In other words: that’s just the kind of people they are. But when our friends do bad things, or our enemies do good things, we tend to chalk that up to transient situational factors—peer pressure, etc.

The social scientist Herbert Kelman has spelled out the implications: “Hostile actions by the enemy are attributed dispositionally and thus provide further evidence of the enemy’s inherently aggressive, implacable character. Conciliatory actions are explained away as reactions to situational forces—as tactical maneuvers, responses to external pressure, or temporary adjustments to a position of weakness—and therefore require no revision of the original image.”

Translation: Saddam Hussein may comply with our demands by letting weapons inspectors into his country, but that’s a temporary expedient and won’t change his long-term plans to conquer America. So we must invade. Or, to take a contemporary example: if the Iranian regime does agree to a deal that limits its nuclear program, that’s just a trick—don’t fall for it.

All of this suggests that one of the most important things journalists can do is try to report accurately and soberly about foreign governments that many of their fellow citizens consider enemies. If ever there was a case where they should try to be fair and balanced, this is it.

And if ever there was a case where that’s hard, this is it. The reason isn’t just that journalists naturally form opinions of foreign governments, and these opinions then shape their view of the facts. It’s also that our brains seem to be designed to keep us in good standing with our peers; following the crowd is natural, and fervently nationalistic crowds are especially hard to resist. This alone probably accounts for much of the media’s credulity about Iraq’s supposed weapons of mass destruction.

There’s one more major source of media bias—the fact that journalists in general are under the pressure Brian Williams was under: to tell a good, dramatic story. Granted, most journalists aren’t under the kind of pressure felt in the TV news business these days: to not just tell a dramatic story, but to be visibly at the center of it—and, ideally, visibly imperiled. (What excites CNN executives more than the sight of Anderson Cooper in a flak jacket?) But all journalists are under pressure to tell a story that will get attention—all the more so now that attention is quantifiable in all media. And of course, few things generate attention like fear—of Iraq, Iran, whatever. Scary foreigners make great click bait.

One thing that runs scary foreigners a close second as an attention getter is a scandal involving someone famous. And if that someone famous is “lying,” that makes for a better story than if he’s just being human. It is, as we say in journalism, a story that’s too good to check.