Steven Pinker—the Harvard cognitive scientist who also chairs the Usage Panel of the American Heritage Dictionary—is not a fan of many of the “rules” one finds in modern guides to grammar and usage, not to mention finger-wagging language columns.

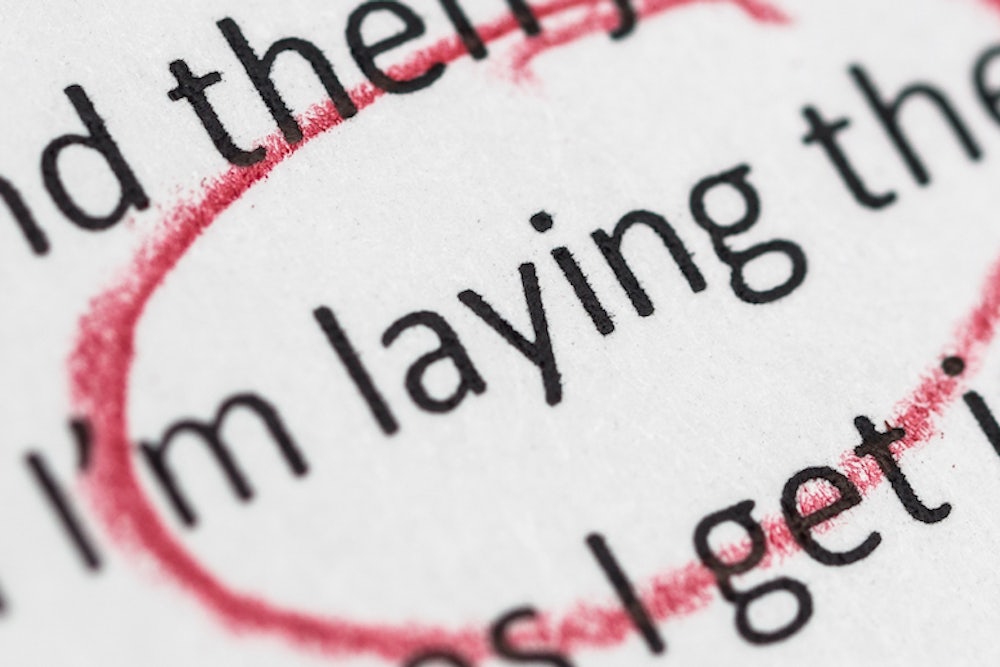

That's part of the reason he wrote The Sense of Style: The Thinking Person's Guide to Writing in the 21st Century, which just came out today: Even though Pinker has long been a fan of Strunk & White's and other style guides, he realized that they are often larded with proscriptions that exist simply because they have been passed down from earlier, different eras, rather than because they are based on any sound grammatical logic or understanding of linguistics (Strunk & White, he notes early on, “misdefined terms such as phrase, participle, and relative clause”). For example, Pinker argues, in many cases it’s perfectly fine—in fact, desirable—to dangle participles, split infinitives, describe things in the passive voice, and engage in various other practices frequently frowned upon by our most authoritative style sources.

But The Sense of Style is also a broader look at why there’s so much bad writing in the world—Pinker takes specific aim at certain types of professional and academic prose that he finds unreadable. And his advice about how to avoid such writing is couched in cognitive-science theories that help him advise readers not just on how to write better, but on why certain decisions lead to smoother, easier-to-parse prose. (He also draws lighthearted examples from frequently interspersed comics ranging from Doonesbury to xkcd.)

In a recent interview with Science of Us, Pinker explained “the curse of knowledge,” offered an explanation for why Twitter debates are so awful, and expounded on the usefulness of the word ain’t.

Jesse Singal: In your book, you laud what you refer to as the “classic style” of writing. Could you run down what it is and why you’re such a fan?

Steven Pinker: Classic style makes writing, which is necessarily artificial, as artificially natural as possible, if you’d pardon the oxymoron. That is, you’re not physically with someone when you write. You’re not literally having a conversation with them, but classic style simulates those experiences, and so it takes an inherently artificial situation, namely writing, and it simulates a more natural interaction, the more natural interaction being (a) conversation and (b) seeing the world. So two people in the same place, one of whom directs the other’s attention to something in the world, is a natural way in which two people interact, and classic style simulates that.

JS: You use the physicist Brian Greene as an example, citing his ability to take incredibly complicated things about string theory and use everyday comparisons to explain them. It seems like there's a striking contrast between his work and academic writing, where even relatively simple concepts can get drowned in words.

SP: Absolutely. And as with the character in the Molière play who has been speaking prose all his life, a lot of effective communicators use classic style even if they don’t realize it. I didn’t realize it, and one of the reasons that Francis-Noël Thomas and Mark Turner's book Clear and Simple as the Truth: Writing Classic Prose struck a chord with me is that, when I switched from academic to popular writing, I made a number of switches in my style. I knew what I was doing, but I never realized that all the steps that I took were part of a coherent attitude towards writing, and classic style is that attitude.

JS: Early on you have some harsh words about the habit of hedging—when you hedge too much in your writing, the book argues, it weakens your argument and robs you of authority. But at the same time, you sort of have to hedge if you want to write in a careful, fair way, right? How do you find the balance?

SP: I think there is a difference between hedging and qualifying. So when you qualify, you state the circumstances in which a generalization does or does not hold. One can list the uncertainties or limitations of a study. One could certainly state the contrary evidence. All of that, as you know, is mandatory.

The problem is that academics hedge thoughtlessly. It is almost a tic or a habit that, rather than being as precise as possible about what the strengths and weaknesses of an argument are, they just drop qualifiers and hedges; they sprinkle their prose with hedges, as a measure of self-defense. So every sentence has a virtually or partially or so to speak in it, which adds no precision to the extent to which one ought to believe the finding. It is almost like the habit in journalism, in crime journalism, of dropping allegedly in every sentence. It is purely a measure of self-defense.

JS: How much of that tendency comes from postmodern and poststructuralist fields where academics have very different ideas of what knowledge is and what can be proven? Do you think that some of those fields have done damage to academic writing?

SP: Oh absolutely, yeah. Unquestionably. Because by far the worst writing in academia comes from postmodernist scholars, notoriously so. When Denis Dutton ran his bad academic writing contest in the late 1990s, it was postmodernists and other similar literary scholars who won the award year after year.

Postmodernism is an extreme exaggeration of a stance which all academics have to some extent: we don’t open our eyes and just see the world as it is. We understand the world through our theories and constructs. We are constantly in danger of being misled by our own unconscious biases and assumptions. Gaining knowledge about the world is extremely difficult, so all of those qualifications are certainly true, and even scientists who believe in an objective reality acknowledge the fragility and difficulty of obtaining knowledge.

But postmodernism takes that to the lunatic extreme of denying that there is such a thing as the real world or objective reality or truth or knowledge at all. The problem is that one can be fully aware of all these epistemological issues, how hard it is to gain knowledge, but not let it cloud up one’s writing. That is, in writing one can for the purpose of exposition adopt a fiction that there is an objective world that you can know just by looking at it even if one is not committed to that as an actual statement. So it is an indispensable fiction even if it is a fiction.

JS: The other category of bad writing you’re pretty hard on consists of phrases like "Significantly expedite the process of," instead of just saying "Speed up." I can understand constant hedging for purposes of “covering your anatomy,” as you put it in the book. But why does this kind of horrible writing persist?

SP: I don’t think we know completely. There may be several dynamics going on. One of them would be an attempt to sound serious, high-falutin, ponderous, not a flibbertigibbet. Another is that if you’re a professional, if you don’t just think about things, but about how to think about things, then you have to go meta. You have to go abstract. You don’t just think about crime, you think about the perspective of criminal law enforcement. You think about the way other people have dealt with the problem, you think about your reaction to the way people have dealt with the problem and so you start to lose your moorings in the actual event itself.

Those stuffy, turgid words are meta-concepts, concepts about concepts, like level, perspective, variable, framework, context. These refer to ways of thinking about problems, which is what professionals do, and when you spend your life doing it you start to forget that there’s a real world that all these things are about.

JS: And that ties in with the so-called “curse of knowledge”: writers sometimes don’t understand that their readers aren’t coming from the same intellectual world.

SP: Exactly. It’s very hard to imagine what it’s like not to know something that you know or to not think about something in the way that you think about it. And if you’re a professional and you’ve spent your life thinking about it, and that’s what you do for a living, you tend to think about it in different terms than the consumers of that knowledge who are interested in the things themselves.

JS: I was sort of seduced by this idea of taking a “so sue me” approach to writing—assuming that readers will react to disagreements with your work in good faith and not operate under the assumption that you’re an offensive monster. But then if you look at the sort of discourse we have going online, and how debates play out on Twitter, the assumption does often seem to be exactly that. Don’t you feel like your average 25-year-old writer is now coming up in an age in which they cannot always be certain that people will treat their prose fairly?

SP: Yeah. That is why I don’t read responses to what I write on Twitter. I find that a waste of time for exactly that reason. In general, there is a well-accepted body of linguistics theory that dates back originally to Paul Grice, which says that ordinary conversation would be impossible unless there was some degree of charity. That is, you read between the lines, you connect the dots. If you did not do that, conversation would be impossible; it would be unbearably pedantic or legalistic, because conversation relies on some degree of cooperation between the speaker and the listener.

The exception that proves the rule is the language of legal contracts, where almost by definition there is no cooperation. You assume that there is an adversarial relationship, and legalese as a result is impossibly convoluted precisely because it tries to anticipate every conceivable objection and loophole and meet them in advance.

JS: You’re not a fan of the “Gotcha gang,” as you call them—folks who take a narrow view of usage that often relies on questionable rules. You write, “In their zeal to purify usage and safeguard the language, they have made it difficult to think clearly about felicity and expression and have muddied the task of explaining the art of writing.” Can you expand on that a little?

SP: Absolutely. Many purists have remarkably little curiosity about the history of the language or the scholarly tradition of examining issues and usage. So a stickler insists that we never let a participle dangle, that you can’t say, “Turning the corner, a beautiful view awaited me,” for example. They never stopped to ask, “Where did that rule come from and what is its basis?” It was simply taught to them and so they reiterate it.

But if you look at the history of great writing and language as it’s been used by its exemplary stylists, you find that they use dangling modifiers all the time. And if you look at the grammar of English, you find that there is no rule that prohibits a dangling modifier. If you look at the history of scholars who have examined the dangling modifier rule, you find that it was pretty much pulled out of thin air by one usage guide a century ago and copied into every one since. And you also find that lots of sentences read much better if you leave the modifier dangling.

So all of these bodies of scholarship, of people who actually study language as it’s been used, language as its logic is dictated by its inherent grammar—that whole body of scholarship is simply not something that your typical stickler has ever looked up.

JS: It sounds like the culprit here is outdated or useless rules.

SP: Yes, combined with the psychology of hazing and initiation rites, namely, “I had to go through it and I’m none the worse—why should you have it any easier?”

JS: Let’s talk about the word "ain’t." "Ain’t" is such a great American word and it’s a microcosm for a lot of class and race stuff that always seeps into these conversations.

SP: Absolutely. It’s not specifically American—I’m pretty sure it goes back to British-English prior to the settlement of the Americas. But "ain't" is the contraction of isn’t, hasn’t, or doesn’t that was not part of the standard dialect of English, meaning it was part of a particular variant of English spoken in London and other parts of southern England. Many features of that dialect became standardized when literacy increased, when printed matter became cheaper and more available, when there was a desire among people in government and education to settle down on one form of the language.

"Ain't" had the bad luck of not being spoken by the upper classes in London, so it was stigmatized as coarse, ungrammatical, incorrect, and so on, even though on its merits there’s nothing wrong with it. In fact, it even has some nice properties, namely it doesn’t have that ugly sibilant and is a single syllable. And that’s why ain’t, even though of course no one uses it in formal writing, is alive and well in other contexts, one of them being the lyrics of popular songs. "Ain’t she sweet," or "it ain’t necessarily so." Just try substituting "isn’t" or "hasn’t" into any of those lyrics and you can see why a lyricist would always go with "ain’t" over "isn’t," which is just ugly.

JS: Popular music would be unlistenable if people followed all these rules.

SP: Absolutely—likewise don’t instead of doesn’t. It’s much more euphonious. Together with probably harmonizing with the general feeling that popular song lyrics are the kind of thing that would be spoken by a common person as opposed to hoity-toity intellectuals. And ain’t also survives in certain expressions that are designed to carry homespun truths. It ain’t over till the fat lady sings, or That ain’t chopped liver. In fact it’s even sometimes sophisticated scholars who use ain’t in scholarly articles to make the point that some statement is so obvious that it is not even worth debate, as if to say that anyone with a lick of sense can see it. This often happens in skilled language use. You use some general belief about language and repurpose it to make a viewpoint.

JS: What do you find to be the most grating thing that grammarians wrongly claim is an error?

SP: The split infinitive is the paradigm case of a bogus rule. Writing that tries to avoid split infinitives can be god-awful. The split infinitive is often a far better stylistic choice. Perhaps the most famous example was when John Roberts administered the oath of office to Barack Obama in January 2009 and un-split a verb in the oath of office. He’s a famous stickler. He almost precipitated a constitutional crisis because it wasn’t clear whether the administration of the oath of office was legitimate in its transfer of power, so they repeated the ceremony in private later that afternoon, where Roberts, presumably gritting his teeth and holding his nose, repeated the verbatim oath as stipulated in the Constitution.

JS: And as you write, it proved he’s not a strict constructionist.

SP: Exactly. He unilaterally amended the Constitution just to avoid the split verb, which after a lifetime of stickling struck him as ungrammatical.

JS: How about actual errors that, despite your live-and-let-live approach to these questions of usage, you find really do grate on you?

SP: I would say it’s malaprops: that is, the use of a fancy word as a hoity-toity synonym for a similar word without realizing that it differs in meaning. And this can be not just with literary words like using fulsome to mean full, which can get you into trouble because being fulsome is a bad thing, not a good thing. So if you thank someone for a fulsome introduction, you’re actually insulting them.

Everyday idioms and slang have a correct and a sloppy usage as well. One could use the term “urban legend” to refer to Mayor LaGuardia, meaning a legend in the city, or “hot-button” to refer to a hot topic. “Hot-button” originally referred to something that was immediately emotionally controversial, meaning it pressed people’s buttons; it wasn’t just stylish or in vogue. Another example is “politically correct” used to refer to something newly stylish as opposed to something dogmatically leftist. The mangling of everyday idioms gets under my skin, I have to admit.

JS: Do you think there will ever be a generation that does not think that “kids these days” do not know how to write and are destroying English?

SP: I doubt it for a couple of reasons. One of them is that language always changes, and as you witness language changing out from beneath your feet, you’re always likely to perceive it as declining. The other is that as people get older, they change; they pay more attention to fine points of usage, and as you pay more attention to something you notice breaches more easily and you confuse changes in yourself with changes in the times. So people also think, at all times, that crime is increasing, that litter is increasing, that disorder is increasing, and I wrote another book on all of that and why that is wrong.

But there is a well-known phenomenon: because people confuse changes in themselves with changes in the times, they tend to get more conservative as they get older. They tend to get more cynical about the direction of humanity.

This interview has been edited for length and clarity.

This piece originally appeared on Science of Us, New York magazine's science blog.